Top 25 Big Data Questions and Answers for Certification

The Big Data certification helps professionals who plan to enter the analytics side of big data. Big data skills help working professionals make quick, data-backed decisions even without deep business analysis knowledge and experience. We have compiled these big data questions and answers so that you can review the subject quickly and sit the certification exam with confidence. Each question comes with the correct answer and an explanation of why it is right. Use these sample questions to practice by answering them yourself first.

The Big Data certification helps you understand business process management and design and deliver solutions that bring change to the organization. Good luck.

Big Data Exam Details:

Exam name: https://www.dasca.org/data-science-certifications/big-data-analyst

Big Data Exam Duration: 120 minutes

No. of Big Data questions: 100

Big Data Certification Passing score: 65%

Validated against: Data Science Council of America

Big Data Test Format: Multiple choice questions

Big Data Exam price: $400

-------------------------------------------------------------------------------------------------------------------------

Top 25 Big Data certification questions and answers

Question 1

Which one of the following options is a real-world application of big data (Hadoop)?

- Media information management

- Software development

- Time management

- None of the above

Description

The correct answer is media information management. Hadoop is a popular open source big data framework used to store and process large volumes of data. One of its applications is managing media files such as audio, video, text and images, which can be stored on the platform and distributed to other devices. It also supports effective analysis of both structured and unstructured data. Big data is used in many other areas as well, including performance management, advertising, cybersecurity and research that depends on gathering large amounts of information.

------------------------------------------------------------------------------------------------------------------------------

Question 2

Which one of the following options is not a mode in which Hadoop can run?

- Standalone mode

- Hibernate-based mode

- Pseudo-distributed mode

- Fully distributed mode

The correct answer is Hibernate-based mode, which is not a Hadoop mode. Hadoop can run in three modes: standalone mode, pseudo-distributed mode and fully distributed mode. Standalone mode is the default; it uses the local file system for input and output operations.

------------------------------------------------------------------------------------------------------------------------------

Question 3

Which one of the following options is the MapReduce framework service that caches files whenever required?

- Data transmission

- Distributed cache

- Information forecasting

- All of the above

The correct answer is distributed cache. When a file is cached for a job, Hadoop makes it available on every data node where the job's tasks run, so the tasks can later read it in their code as a local file. The distributed cache also tracks the modification timestamps of the cache files, and the files must not be modified while the job is executing.

------------------------------------------------------------------------------------------------------------------------------

Question 4

Which one of the following options is the application of the Name Node in big data?

- Metadata management

- Information analysis

- Interpreting errors

- None of the above

The correct answer is metadata management. Metadata describes each file, including where its data is located and on which data nodes it is stored. The name node maintains the directory tree of all files in the cluster's file system and keeps track of the latest checkpoint of the namespace.

------------------------------------------------------------------------------------------------------------------------------

Question 5

Which one of the following options is not an input format in big data?

- Text input format

- Sequence file

- Key value input format

- Media input format

The correct answer is media input format, which does not exist in Hadoop. The text input format is the default and the most widely used. The key value input format is used for plain text files in which each line is broken into a key and a value. The sequence file input format reads files stored as sequence files. These input formats are used across many kinds of Hadoop jobs and help locate specific information within a large amount of data.

------------------------------------------------------------------------------------------------------------------------------

Question 6

In big data, which one of the following options is the core method for reducer?

- cleanup()

- reduce()

- setup()

- All of the above

The correct answer is all of the above. The setup() method configures parameters such as the distributed cache and the input data size, and is called once at the start of the task. The reduce() method does the actual work and is called once per key. The cleanup() method removes the temporary files created during the task and runs only once, at the end of the task.
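The call order of the three reducer methods can be illustrated with a small simulation. This is a minimal sketch in plain Python, not Hadoop's Java API; `SumReducer` and `run_reduce_task` are hypothetical names introduced only for this example.

```python
from collections import defaultdict


class SumReducer:
    """Hypothetical reducer that mimics the setup/reduce/cleanup lifecycle."""

    def __init__(self):
        self.calls = []    # records the order in which the methods run
        self.output = {}

    def setup(self):
        # Runs once per task, before any key is processed.
        self.calls.append("setup")

    def reduce(self, key, values):
        # Runs once per key, with all values grouped under that key.
        self.calls.append(f"reduce:{key}")
        self.output[key] = sum(values)

    def cleanup(self):
        # Runs once per task, after the last key.
        self.calls.append("cleanup")


def run_reduce_task(reducer, pairs):
    """Group (key, value) pairs by key, then drive the reducer lifecycle."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    reducer.setup()
    for key in sorted(grouped):    # keys are handed to the reducer in sorted order
        reducer.reduce(key, grouped[key])
    reducer.cleanup()
    return reducer.output


reducer = SumReducer()
result = run_reduce_task(reducer, [("b", 2), ("a", 1), ("b", 3)])
print(reducer.calls)
print(result)
```

The recorded call list shows one `setup`, one `reduce` per distinct key, and one `cleanup`.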

------------------------------------------------------------------------------------------------------------------------------

Question 7

Which one of the following options defines the role of the job tracker in big data?

- Resource management

- Clustering

- Task execution

- None of the above

The correct answer is resource management. The primary function of the job tracker is to manage resources: it tracks resource availability and manages the life cycle of tasks. The job tracker typically runs on its own node. It communicates with the name node to find the location of the data, identifies the best task tracker nodes to execute the tasks, submits the work to them, and monitors the individual task trackers.

------------------------------------------------------------------------------------------------------------------------------

Question 8

Which one of the following options defines the use of the record reader in big data?

- Read split data into a record

- Manage information records

- Read input values of the task

- None of the above

The correct answer is that the record reader reads split data into a record. Hadoop divides the input into splits, and a record can straddle the boundary between two of them; the record reader reassembles such data into a single record. For example, if one part holds “hello” and the next holds “world”, the record reader reads them as the single record “hello world”.
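A simplified sketch of how a line-oriented reader can handle records that cross a split boundary follows. It is loosely modelled on the usual convention (a reader that does not start at offset 0 skips its first line, and every reader finishes the line it has started); `read_split` is a hypothetical helper, not a Hadoop class.

```python
def read_split(data, start, end):
    """Return the lines owned by the byte range [start, end) of data."""
    pos = start
    if start != 0:
        # The reader of the previous split owns the line that crosses into
        # this split, so skip ahead to the first byte after the next newline.
        newline = data.find(b"\n", start)
        pos = len(data) if newline == -1 else newline + 1
    records = []
    # A line that begins at or before `end` is read in full, even if it
    # extends past the end of this split.
    while pos <= end and pos < len(data):
        newline = data.find(b"\n", pos)
        line_end = len(data) if newline == -1 else newline
        records.append(data[pos:line_end].decode())
        pos = line_end + 1
    return records


data = b"hello world\nfoo bar\nbaz\n"
# The first boundary (byte 8) falls in the middle of "hello world".
splits = [(0, 8), (8, 16), (16, 24)]
per_split = [read_split(data, start, end) for start, end in splits]
print(per_split)
```

Each line is returned exactly once, and "hello world" comes back whole from the first split even though its bytes span two splits.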

------------------------------------------------------------------------------------------------------------------------------

Question 9

What will happen if a task is executed in big data (Hadoop) with an already existing output directory?

- An exception is raised stating that the directory already exists

- Task will delete the directory

- Execution will be aborted

- None of the above

The correct answer is that an exception is raised. If a job is run with an output directory that already exists, Hadoop throws an exception stating that the output directory already exists. Before running the job, make sure the output directory does not exist.
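The same guard can be sketched against the local file system. This is only an illustration of the behaviour described above; `prepare_output_dir` is a hypothetical function and the directory names are made up.

```python
import os
import tempfile


def prepare_output_dir(path):
    """Refuse to proceed if the output directory is already present."""
    if os.path.exists(path):
        raise FileExistsError(f"Output directory {path} already exists")
    os.makedirs(path)
    return path


with tempfile.TemporaryDirectory() as base:
    out = os.path.join(base, "job-output")
    prepare_output_dir(out)        # first run: directory is created
    try:
        prepare_output_dir(out)    # second run with the same directory
        second_run_failed = False
    except FileExistsError as err:
        second_run_failed = True
        print(err)
```

Failing early protects the results of a previous run from being overwritten by accident.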

------------------------------------------------------------------------------------------------------------------------------

Question 10

Which one of the following options is not part of the five Vs of big data?

- Volume

- Variety

- Vitality

- Veracity

The correct answer is vitality. Big data refers to large collections of data, both structured and complex unstructured, handled with open source tools such as Hadoop. The five Vs of big data are volume, velocity, variety, veracity and value. Volume and velocity reflect the data that grows every day, such as conversations on different media platforms, while veracity concerns the accuracy of the available data.

------------------------------------------------------------------------------------------------------------------------------

Question 11

Which one of the following options is not a component of Hadoop?

- Map Reduce

- HDFS

- YARN

- Policy management

The correct answer is policy management. Big data is mostly handled with open source components that store, process and analyze complex data sets. MapReduce is a programming model for processing large data sets in parallel. HDFS is a Java-based distributed file system. YARN is a resource management framework that also handles requests from distributed applications. Policy management is not one of these components.
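The MapReduce programming model mentioned above can be illustrated with the classic word count. This is a single-process sketch in plain Python; the function names are chosen for this example, and a real job would run the map and reduce phases in parallel across the cluster.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine the values of one key into a single result."""
    return key, sum(values)


def word_count(lines):
    mapped = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())


counts = word_count(["big data big clusters", "data nodes store data"])
print(counts)
```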

------------------------------------------------------------------------------------------------------------------------------

Question 12

Which one of the following options is the command used to check the condition of the distributed file system when files become corrupt or unavailable?

- Map-reduce

- FSCK (file system check)

- YARN

- None of the above

The correct answer is FSCK. The FSCK (file system check) command produces a summary report that describes the state of HDFS. It checks for errors in the file system but does not repair them, and it can be run on the entire file system or on a specific subset of files. This differs from the traditional FSCK utility of native file systems, which both finds errors and fixes them.

------------------------------------------------------------------------------------------------------------------------------

Question 13

Which one of the following options is the command used to test whether all Hadoop daemons are running correctly?

- YARN

- HDFS

- JPS

- None of the above

The correct answer is JPS. The JPS command lists the Java processes running on a machine, so it shows whether Hadoop daemons such as the name node, data node, resource manager and node manager are up. A daemon missing from the output points to a problem to investigate; JPS itself does not fix anything.
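Checking the command output for missing daemons is easy to script. The sketch below parses text in the `<pid> <name>` shape that `jps` prints; the sample output and process IDs are invented for illustration, and `missing_daemons` is a hypothetical helper. Which daemons to expect depends on the role of the host.

```python
# Daemons expected on a single-node setup (an assumption for this example).
EXPECTED_DAEMONS = {"NameNode", "DataNode", "ResourceManager", "NodeManager"}


def missing_daemons(jps_output, expected=EXPECTED_DAEMONS):
    """Return the expected daemon names that do not appear in jps output."""
    running = set()
    for line in jps_output.strip().splitlines():
        parts = line.split(maxsplit=1)    # "<pid> <process name>"
        if len(parts) == 2:
            running.add(parts[1].strip())
    return sorted(expected - running)


# Illustrative output only; real process IDs will differ.
sample = """
4821 NameNode
4990 DataNode
5312 ResourceManager
5720 Jps
"""
print(missing_daemons(sample))
```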

------------------------------------------------------------------------------------------------------------------------------

Question 14

Which one of the given options is the reason why Hadoop is required for big data analytics?

- Hadoop offers processing, storage and data collection

- It stores data in raw form

- It is feasible from an economic viewpoint

- All of the above

The correct answer is all of the above. Without proper data analysis tools, it is difficult to explore and analyze a large amount of data. Hadoop offers data collection, storage and processing capabilities. It stores data in its raw form, exactly as it arrives. It is open source software that runs on commodity hardware, which makes it economical and explains why many companies and organizations use it.

------------------------------------------------------------------------------------------------------------------------------

Question 15

Which one of the following options is the Hadoop feature in which the computation is moved to the nodes that hold the data, instead of the data being brought to the location where the MapReduce algorithm is processed?

- Data processing

- Data locality

- Data tracking

- Data submission

The correct answer is data locality. Data locality is a core feature of Hadoop in big data analytics: it moves the computation to the data rather than the data to the computation. The data stays on the cluster nodes where it is stored, and MapReduce tasks are scheduled on or near those nodes instead of the data being shipped to the location where the job was submitted.
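A locality-aware scheduling decision can be sketched as a simple preference order: a node that holds a replica of the block, then a node in the same rack as a replica, then any free node. The function, node names and rack names below are hypothetical; real schedulers weigh more factors.

```python
def pick_node(block_replicas, free_nodes, rack_of):
    """Choose where to run a task that reads one block.

    Returns (node, locality_level). Nodes are checked in sorted order
    only to make this example deterministic.
    """
    candidates = sorted(free_nodes)
    for node in candidates:
        if node in block_replicas:
            return node, "node-local"
    replica_racks = {rack_of[node] for node in block_replicas}
    for node in candidates:
        if rack_of[node] in replica_racks:
            return node, "rack-local"
    if candidates:
        return candidates[0], "off-rack"
    return None, "none"


rack_of = {"n1": "r1", "n2": "r1", "n3": "r2", "n4": "r2", "n5": "r3"}
replicas = {"n1", "n3"}    # nodes that store a copy of the block

print(pick_node(replicas, {"n2", "n3"}, rack_of))
print(pick_node(replicas, {"n2", "n5"}, rack_of))
print(pick_node(replicas, {"n5"}, rack_of))
```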

------------------------------------------------------------------------------------------------------------------------------

Question 16

Which one of the following options is the operation of big data analytics that is done on the basis of the respective sizes of the data blocks?

- HDFS Data block indexing

- Task execution

- Information management

- None of the above

The correct answer is HDFS data block indexing. HDFS indexes data blocks according to their size. The data nodes store the data blocks, while the name node manages them, and clients obtain information about the data blocks from the name node quickly.
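How block size determines the layout of a file can be shown with a little arithmetic. The sketch assumes a block size of 128 MB, the usual default in recent Hadoop versions (it is configurable); `split_into_blocks` is a hypothetical helper.

```python
MB = 1024 * 1024
BLOCK_SIZE = 128 * MB    # assumed default; clusters may configure another value


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the sizes of the blocks a file of file_size bytes occupies."""
    blocks = []
    remaining = file_size
    while remaining > 0:
        size = min(block_size, remaining)
        blocks.append(size)
        remaining -= size
    return blocks


blocks = split_into_blocks(500 * MB)
print(len(blocks), [size // MB for size in blocks])
```

A 500 MB file occupies three full blocks and one final block of 116 MB; the last block only takes up as much space as the data it holds.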

------------------------------------------------------------------------------------------------------------------------------

Question 17

Which one of the following options is not a data management tool used with edge nodes in Hadoop?

- Oozie

- Ambari

- Flume

- Azure

The correct answer is Azure. Several data management tools are used with edge nodes in Hadoop, including Oozie, Ambari, Flume, Hue and Pig, which are among the most popular. For more advanced data management, tools such as HCatalog, Avro and Bigtop are also used. These tools are built to manage larger volumes of data than general-purpose data management tools.

------------------------------------------------------------------------------------------------------------------------------

Question 18

Which one of the following options is not a tombstone marker used for deletion in HBase?

- Cluster Delete Marker

- Family Delete Marker

- Column Delete Marker

- Version Delete Marker

The correct answer is Cluster Delete Marker, which does not exist in HBase. The Family Delete Marker marks all the columns of a column family, the Column Delete Marker marks all versions of a single column, and the Version Delete Marker marks a single version of a single column. These are the three tombstone markers used for deletion in HBase.
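The effect of the three markers can be sketched on a tiny in-memory table. This is not the HBase client API; the data layout and `visible_cells` are hypothetical, and the sketch assumes that family and column markers hide every version with a timestamp at or below the marker's timestamp, while a version marker hides exactly one timestamp.

```python
def visible_cells(cells, markers):
    """Return the cells that are not masked by any tombstone marker.

    cells:   {(family, qualifier, timestamp): value}
    markers: list of (kind, family, qualifier, timestamp), where kind is
             "family", "column" or "version" (qualifier is None for "family").
    """
    def masked(family, qualifier, timestamp):
        for kind, m_family, m_qualifier, m_timestamp in markers:
            if m_family != family:
                continue
            if kind == "family" and timestamp <= m_timestamp:
                return True
            if m_qualifier != qualifier:
                continue
            if kind == "column" and timestamp <= m_timestamp:
                return True
            if kind == "version" and timestamp == m_timestamp:
                return True
        return False

    return {key: value for key, value in cells.items() if not masked(*key)}


cells = {
    ("cf", "a", 1): "a1",
    ("cf", "a", 2): "a2",
    ("cf", "b", 1): "b1",
    ("cf", "b", 2): "b2",
}

after_version = visible_cells(cells, [("version", "cf", "a", 1)])
after_column = visible_cells(cells, [("column", "cf", "b", 2)])
after_family = visible_cells(cells, [("family", "cf", None, 2)])
print(sorted(after_version), sorted(after_column), sorted(after_family))
```

The markers only hide data at read time in this sketch; in HBase the masked cells are physically removed later, during compaction.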

------------------------------------------------------------------------------------------------------------------------------

Question 19

Which one of the following options is the big data analytics method that is mostly used by many organizations in order to increase their business revenue?

- Predictive analytics

- Data collection

- Clustering

- None of the above

The correct answer is predictive analytics. Big data analytics has become an important asset for business organizations. Predictive analytics in particular lets a business make customized recommendations and suggests ways to increase revenue, and insight into customer preferences helps companies build products that match what customers want. Businesses that adopt big data analytics are reported to see revenue increase by roughly 5-20%.

------------------------------------------------------------------------------------------------------------------------------

Question 20

What happens if two different users try to access the same file in HDFS?

- Both users can access the file

- Only the first user will get access to the file

- The second user will get access to the file

- Both users will be rejected

The correct answer is that only the first user will get access to the file. HDFS supports exclusive writes only: when the first user opens the file for writing, the name node grants access to that user, and the second user's request to write to the same file is rejected.
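The single-writer rule can be sketched as a small lease table. `LeaseManager` is a hypothetical class written for this example; it ignores details such as lease expiry and renewal.

```python
class LeaseManager:
    """Grants at most one writer per file path at a time."""

    def __init__(self):
        self._holders = {}    # path -> client currently allowed to write

    def open_for_write(self, path, client):
        holder = self._holders.get(path)
        if holder is not None and holder != client:
            return False      # another client already holds the lease
        self._holders[path] = client
        return True

    def close(self, path, client):
        # Only the current holder can release the lease.
        if self._holders.get(path) == client:
            del self._holders[path]


leases = LeaseManager()
first = leases.open_for_write("/data/report.csv", "user-a")
second = leases.open_for_write("/data/report.csv", "user-b")
leases.close("/data/report.csv", "user-a")
retry = leases.open_for_write("/data/report.csv", "user-b")
print(first, second, retry)
```

The second user is refused only while the first user holds the file open for writing; once it is closed, the request succeeds.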

------------------------------------------------------------------------------------------------------------------------------

Question 21

Which one of the following options is an algorithm that is applied to the Name Node for deciding how to place blocks and their replicas?

- Rack awareness

- Performance enhancement

- Predictive algorithm

- None of the above

The correct answer is rack awareness. Rack awareness is the algorithm the name node uses to decide where to place data blocks and their replicas. Based on the rack definitions, it keeps as much network traffic as possible between data nodes in the same rack, and it ensures that the copies of a block are spread over different racks so that the failure of a single rack does not lose all of them.
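A rack-aware placement for three replicas can be sketched as follows. It follows the commonly described default of one replica on the writer's node, one on a node in a different rack, and one on another node in that second rack. `place_replicas` and the topology are hypothetical, and the first eligible node in sorted order is picked only to keep the example deterministic.

```python
def place_replicas(writer, rack_of):
    """Pick three nodes for a block written from the node `writer`."""
    first = writer
    other_racks = sorted(n for n in rack_of if rack_of[n] != rack_of[first])
    if not other_racks:
        raise ValueError("need at least two racks")
    second = other_racks[0]
    same_rack_as_second = sorted(
        n for n in rack_of if rack_of[n] == rack_of[second] and n != second
    )
    if not same_rack_as_second:
        raise ValueError("second rack needs at least two nodes")
    third = same_rack_as_second[0]
    return [first, second, third]


rack_of = {"n1": "r1", "n2": "r1", "n3": "r2", "n4": "r2"}
placement = place_replicas("n1", rack_of)
print(placement, {rack_of[node] for node in placement})
```

Two replicas share a rack, which keeps one of the copy transfers inside a rack, while the third sits in a different rack and survives a single rack failure.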

------------------------------------------------------------------------------------------------------------------------------

Question 22

In a map-reduce framework, what is the role of distributed cache?

- Creates cache files for applications

- Deletes temporary files

- Locate file location

- None of the above

The correct answer is that it creates cache files for applications. The distributed cache is a feature of the MapReduce framework that caches files needed by applications. Hadoop makes the cached files available on the data nodes where the tasks are executing, and each task can read them as local files.
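A typical use of cached files is a small lookup table that every map task reads locally. The sketch below imitates that pattern with temporary directories standing in for nodes; `localize`, `map_task`, the file name and the sample data are all hypothetical.

```python
import os
import shutil
import tempfile


def localize(cache_file, node_dirs):
    """Copy the cache file once into each node's local directory."""
    return {node: shutil.copy(cache_file, path) for node, path in node_dirs.items()}


def map_task(records, local_cache_path):
    """Load the lookup table from the local copy, then enrich each record."""
    with open(local_cache_path) as handle:
        lookup = dict(line.strip().split(",", 1) for line in handle if line.strip())
    return [(code, lookup.get(code, "unknown")) for code in records]


with tempfile.TemporaryDirectory() as base:
    source = os.path.join(base, "countries.csv")
    with open(source, "w") as handle:
        handle.write("IN,India\nUS,United States\n")

    node_dirs = {}
    for node in ("node1", "node2"):
        node_dirs[node] = os.path.join(base, node)
        os.makedirs(node_dirs[node])

    local_paths = localize(source, node_dirs)
    joined = map_task(["US", "IN", "FR"], local_paths["node2"])

print(joined)
```

Because every node has its own copy, the tasks read the lookup table from local disk instead of fetching it over the network for each record.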

------------------------------------------------------------------------------------------------------------------------------

Question 23

What happens if there is no information or data in a name node?

- An empty name node

- A name node cannot exist without data in it

- Name node will stay as it is

- None of the above

The correct answer is that a name node cannot exist without data in it. If a name node is present, it necessarily holds some information, because storing information about the file system is its purpose. An empty name node therefore cannot be created in big data analytics.

-----------------------------------------------------------------------------------------------------------------------------

Question 24

Which one of the following options is the solution to decrease the time that is consumed by the large clusters of Hadoop in the name node recovery process?

- Map-reduce framework

- FSCK method

- HDFS high availability architecture

- JPS command

The correct answer is HDFS high availability architecture. The name node recovery process gets a Hadoop cluster running again after the name node fails. On large Hadoop clusters this process consumes a lot of time, which also makes routine maintenance more complex. In this situation the HDFS high availability architecture is the best solution, because it reduces the recovery time for large clusters.

-----------------------------------------------------------------------------------------------------------------------

Question 25

Which one of the following options is the reason due to which the HDFS is suitable only for large data sets?

- Name node performance issues

- Cluster processing time

- Data corruption

- Information inaccuracy

The correct answer is name node performance issues. The name node keeps the metadata for every file and block in memory. A few large files need little metadata, but the same amount of data spread over many small files produces far more metadata entries, which fills the name node's memory and degrades its performance. This is why HDFS is better suited to large data sets than to large numbers of small files.
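The metadata overhead of small files can be estimated with rough arithmetic. The sketch assumes a 128 MB block size and about 150 bytes of name node memory per file or block object; the 150-byte figure is a widely quoted rule of thumb rather than an exact number, and `namenode_objects` is a hypothetical helper.

```python
KB = 1024
GB = 1024 ** 3
BLOCK_SIZE = 128 * 1024 * 1024    # assumed block size
BYTES_PER_OBJECT = 150            # rough rule of thumb, not an exact figure


def namenode_objects(file_sizes, block_size=BLOCK_SIZE):
    """Count metadata objects: one per file plus one per block."""
    objects = 0
    for size in file_sizes:
        blocks = -(-size // block_size)    # ceiling division
        objects += 1 + blocks
    return objects


one_large_file = [1 * GB]
many_small_files = [128 * KB] * 8192    # the same 1 GB of data in total

large_objects = namenode_objects(one_large_file)
small_objects = namenode_objects(many_small_files)
print(large_objects, large_objects * BYTES_PER_OBJECT)
print(small_objects, small_objects * BYTES_PER_OBJECT)
```

Under these assumptions, storing the same gigabyte as 8,192 small files takes well over a thousand times more name node metadata than storing it as one large file.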

Find a course provider to learn Big Data

Java training | J2EE training | J2EE Jboss training | Apache JMeter trainingTake the next step towards your professional goals in Big Data

Don't hesitate to talk with our course advisor right now

Receive a call

Contact NowMake a call

+1-732-338-7323Take our FREE Skill Assessment Test to discover your strengths and earn a certificate upon completion.

Enroll for the next batch

big data full course

- Dec 15 2025

- Online

big data full course

- Dec 16 2025

- Online

big data full course

- Dec 17 2025

- Online

big data full course

- Dec 18 2025

- Online

big data full course

- Dec 19 2025

- Online

Related blogs on Big Data to learn more

What is Big Data – Characteristics, Types, Benefits & Examples

Explore the intricacies of "What is Big Data – Characteristics, Types, Benefits & Examples" as we dissect its defining features, various types, and the tangible advantages it brings through real-world illustrations.

Top 10 Open-Source Big Data Tools in 2024

In the dynamic world of big data, open-source tools are pivotal in empowering organizations to harness the immense potential of vast and complex datasets. Moreover, as we enter 2024, the landscape big data tools and technologies continues evolving be

AWS Big Data Certification Dumps Questions to Practice Exam Preparation

Certification in Amazon Web Service Certified Big data specialist will endorse your skills in the design and implementation of the AWS services on the data set. These aws big data exam questions are prepared as study guide to test your knowledge and

Sixth Edition of Big Data Day LA 2018 - Register Now!

If you’re keen tapping into the advances in the data world, and currently on a quest in search engines, looking for Big Data conferences and events in the USA, there is a big one coming up your way! Yes, the sixth annual edition of Big Data Day LA

15 Popular Big Data Courses to learn for the future career

We have found a list of big data courses that are necessarily required for the future. Professionals and freshmen who are learning these courses prepare the participants to see bigdata careers with high pay jobs.

Best countries to work for Big Data enthusiasts

China is fast becoming a global leader in the world of Big Data, and the recently held China International Big Data Industry Expo 2018

Top Institutes to enroll for Big Data Certification Courses in NYC

If achieving a career breakthrough is hard, harder is sustaining a long-run. Why? Organizations are focusing on New Yorkers who can work dynamically and leverage their skills from the word go, and that’s why.

The emergence of Cloudera

Cloudera is the leading worldwide platform provider of Machine Learning. There is reportedly an accelerated momentum in the Cybersecurity market.

Why there is a need to fill the skill gap to land in a Hadoop and Big Data career?

The world is witnessing the tremendous learning of Big Data platform and artificial intelligence associated with it. The demand for Analytics skill is going up steadily but there is a huge deficit on the supply side.

Latest blogs on technology to explore

From Student to AI Pro: What Does Prompt Engineering Entail and How Do You Start?

Explore the growing field of prompt engineering, a vital skill for AI enthusiasts. Learn how to craft optimized prompts for tools like ChatGPT and Gemini, and discover the career opportunities and skills needed to succeed in this fast-evolving indust

How Security Classification Guides Strengthen Data Protection in Modern Cybersecurity

A Security Classification Guide (SCG) defines data protection standards, ensuring sensitive information is handled securely across all levels. By outlining confidentiality, access controls, and declassification procedures, SCGs strengthen cybersecuri

Artificial Intelligence – A Growing Field of Study for Modern Learners

Artificial Intelligence is becoming a top study choice due to high job demand and future scope. This blog explains key subjects, career opportunities, and a simple AI study roadmap to help beginners start learning and build a strong career in the AI

Java in 2026: Why This ‘Old’ Language Is Still Your Golden Ticket to a Tech Career (And Where to Learn It!

Think Java is old news? Think again! 90% of Fortune 500 companies (yes, including Google, Amazon, and Netflix) run on Java (Oracle, 2025). From Android apps to banking systems, Java is the backbone of tech—and Sulekha IT Services is your fast track t

From Student to AI Pro: What Does Prompt Engineering Entail and How Do You Start?

Learn what prompt engineering is, why it matters, and how students and professionals can start mastering AI tools like ChatGPT, Gemini, and Copilot.

Cyber Security in 2025: The Golden Ticket to a Future-Proof Career

Cyber security jobs are growing 35% faster than any other tech field (U.S. Bureau of Labor Statistics, 2024)—and the average salary is $100,000+ per year! In a world where data breaches cost businesses $4.45 million on average (IBM, 2024), cyber secu

SAP SD in 2025: Your Ticket to a High-Flying IT Career

In the fast-paced world of IT and enterprise software, SAP SD (Sales and Distribution) is the secret sauce that keeps businesses running smoothly. Whether it’s managing customer orders, pricing, shipping, or billing, SAP SD is the backbone of sales o

SAP FICO in 2025: Salary, Jobs & How to Get Certified

AP FICO professionals earn $90,000–$130,000/year in the USA and Canada—and demand is skyrocketing! If you’re eyeing a future-proof IT career, SAP FICO (Financial Accounting & Controlling) is your golden ticket. But where do you start? Sulekha IT Serv

Train Like an AI Engineer: The Smartest Career Move You’ll Make This Year!

Why AI Engineering Is the Hottest Skillset Right Now From self-driving cars to chatbots that sound eerily human, Artificial Intelligence is no longer science fiction — it’s the backbone of modern tech. And guess what? Companies across the USA and Can

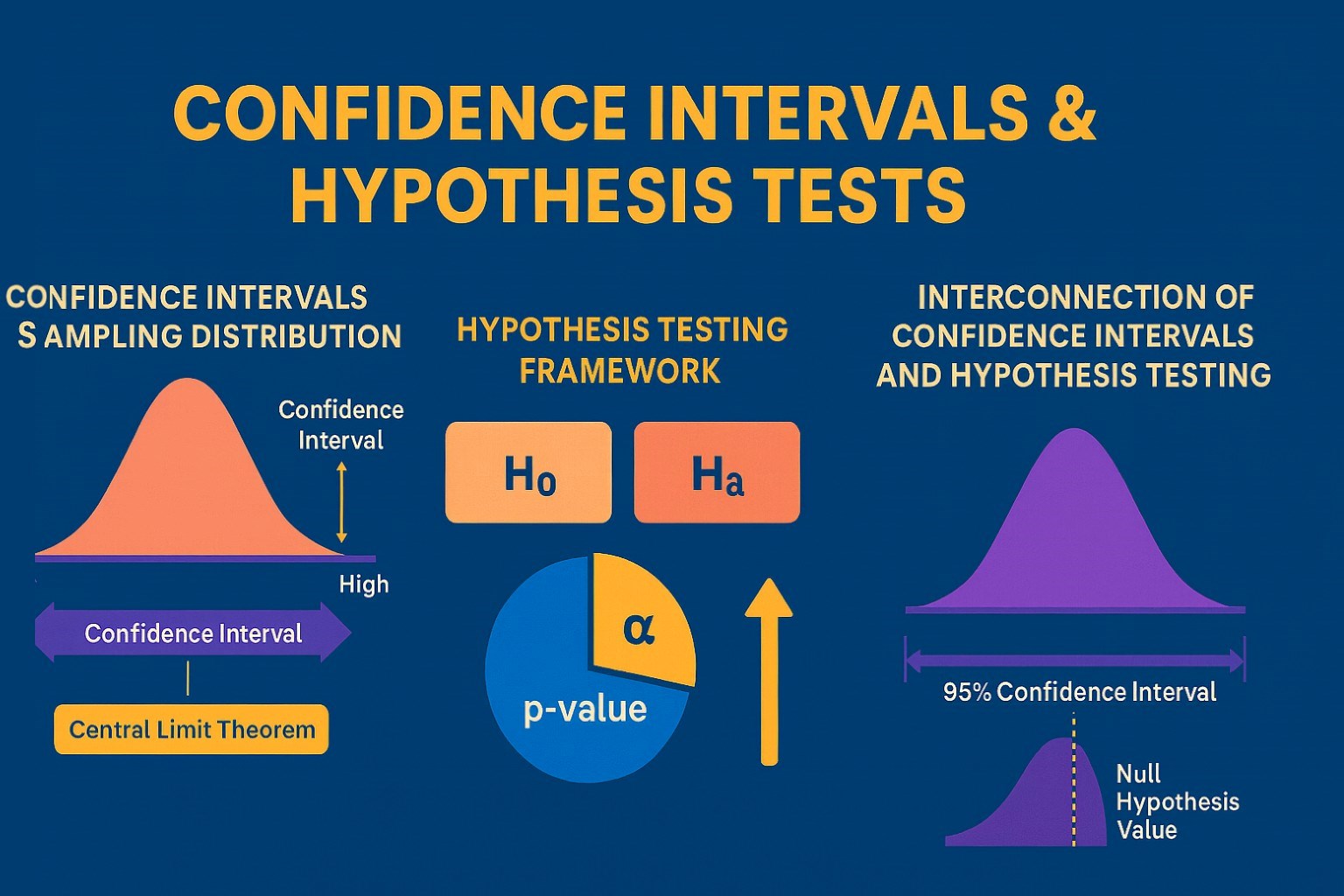

Confidence Intervals & Hypothesis Tests: The Data Science Path to Generalization

Learn how confidence intervals and hypothesis tests turn sample data into reliable population insights in data science. Understand CLT, p-values, and significance to generalize results, quantify uncertainty, and make evidence-based decisions.