How to make use of DistCp performance improvements in Hadoop?

Most Hadoop developers are familiar with DistCp (distributed copy), the tool for copying large amounts of data within and between Apache Hadoop clusters. It is widely used to take data backups, both within a cluster and across clusters. Each backup run performed with DistCp is called a ‘backup cycle’, and a cycle can be slow compared with many other operations in Apache Hadoop. Despite this, the tool has become very important and popular. In this blog, let us examine why it matters and how to make use of recent DistCp performance improvements.

How DistCp Works

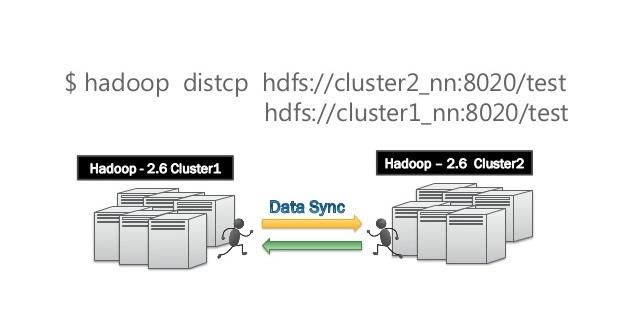

DistCp uses MapReduce to copy files. The data transfer happens in two steps:

- First, DistCp creates a copy list, that is, a list of the files to be copied.

- Then, it runs a MapReduce job that copies the files in the copy list.

Note: The MapReduce job is map-only. There are no reducers, and each mapper copies a subset of the files in the copy list.
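The two steps can be modelled in a few lines of Python. This is a simplified sketch, not DistCp's source code: the directory listing, paths, and sizes are hypothetical, and the greedy largest-first assignment only illustrates the idea behind DistCp's default `uniformsize` strategy (giving each map a similar number of bytes to copy); it is not that strategy's actual algorithm.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class CopyEntry:
    path: str
    size: int  # bytes


def build_copy_list(listing: Iterable[Tuple[str, int]]) -> List[CopyEntry]:
    """Step 1: turn a hypothetical (path, size) listing of source files into a copy list."""
    return [CopyEntry(path, size) for path, size in listing]


def split_for_mappers(copy_list: List[CopyEntry], num_maps: int):
    """Step 2: give each map task a subset of the copy list (no reduce phase).

    Greedy largest-first: each file goes to the map with the fewest bytes so
    far, so every map ends up with a similar amount of data to copy.
    """
    buckets: List[List[CopyEntry]] = [[] for _ in range(num_maps)]
    loads = [0] * num_maps
    for entry in sorted(copy_list, key=lambda e: e.size, reverse=True):
        i = loads.index(min(loads))  # least-loaded map so far
        buckets[i].append(entry)
        loads[i] += entry.size
    return buckets, loads


listing = [("/data/a", 400), ("/data/b", 300), ("/data/c", 200), ("/data/d", 100)]
buckets, loads = split_for_mappers(build_copy_list(listing), num_maps=2)
for i, bucket in enumerate(buckets):
    print(f"map {i}: {[e.path for e in bucket]} ({loads[i]} bytes)")
```

With this listing, map 0 gets `/data/a` and `/data/d` and map 1 gets `/data/b` and `/data/c`, so each map copies 500 bytes.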

Benefits of using DistCp

Running DistCp against a read-only HDFS snapshot of a directory, instead of the live directory, helps users avoid data inconsistencies caused by files changing while they are being copied.

This snapshot-based approach is especially useful when large directories are renamed on the primary cluster. Without HDFS-7535, DistCp does not apply such a rename on the destination; instead, it copies the renamed directory again, transferring large volumes of data that already exist on the destination cluster.
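To see why rename handling matters, the sketch below parses a snapshot diff report and turns it into actions on the destination. The report format is an assumption modelled on the output of `hdfs snapshotDiff` (a type code `M`, `+`, `-` or `R`, then a path, with renames written as `old -> new`); the paths and the `parse_diff_report` and `plan_target_actions` helpers are hypothetical. It illustrates the idea behind HDFS-7535, not its implementation.

```python
from typing import Iterable, List, Optional, Tuple

Entry = Tuple[str, str, Optional[str]]  # (type code, path, new path for renames)


def parse_diff_report(lines: Iterable[str]) -> List[Entry]:
    """Parse snapshot-diff lines such as 'R\t./logs -> ./logs_2024'.

    Assumed format: a type code (M, +, -, R), whitespace, then a path;
    renames are written 'old -> new'. Other lines (e.g. the header) are skipped.
    """
    entries: List[Entry] = []
    for line in lines:
        parts = line.split(None, 1)
        if len(parts) != 2 or parts[0] not in {"M", "+", "-", "R"}:
            continue
        code, rest = parts
        if code == "R":
            old, new = (p.strip() for p in rest.split("->", 1))
            entries.append((code, old, new))
        else:
            entries.append((code, rest.strip(), None))
    return entries


def plan_target_actions(entries: List[Entry], rename_aware: bool = True):
    """Turn diff entries into actions on the destination directory."""
    actions = []
    for code, path, new_path in entries:
        if code == "R" and rename_aware:
            actions.append(("rename", path, new_path))  # metadata only, no data re-sent
        elif code == "R":
            # Not rename-aware: old copy removed (if deletes are synced) and
            # all of the renamed data is copied again under its new name.
            actions.append(("delete", path))
            actions.append(("copy", new_path))
        elif code == "-":
            actions.append(("delete", path))
        elif code == "+":
            actions.append(("copy", path))
        # "M" (modified) entries are skipped to keep the sketch short.
    return actions


report = [
    "Difference between snapshot s0 and snapshot s1 under directory /src:",
    "M\t.",
    "R\t./logs -> ./logs_2024",
    "+\t./new.csv",
]
entries = parse_diff_report(report)
print(plan_target_actions(entries))
# [('rename', './logs', './logs_2024'), ('copy', './new.csv')]
print(plan_target_actions(entries, rename_aware=False))
# [('delete', './logs'), ('copy', './logs_2024'), ('copy', './new.csv')]
```

For a large directory, the rename-aware plan costs a single metadata operation on the destination, while the other plan re-sends every byte under `./logs_2024`.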

Latest Improvements in DistCp

- DistCp takes less time to create the copy list.

- Each DistCp mapper has less work to do, and that work is better optimized.

- Fewer files have to be processed in each backup cycle.

- The risk of running out of memory while processing large directories is lower.

- Data consistency during backups is further improved.
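A rough model of the first and third points above: if the copy list is built from what changed between two snapshots instead of from a full listing, each cycle handles far fewer files. This is a hypothetical sketch in which each snapshot is a plain `dict` mapping a path to a version number; real HDFS obtains the changes from the NameNode's snapshot diff report rather than by comparing two listings as done here.

```python
from typing import Dict, List

Snapshot = Dict[str, int]  # hypothetical: path -> version of the file


def full_copy_list(snapshot: Snapshot) -> List[str]:
    """Without a diff: every file in the snapshot goes on the copy list."""
    return sorted(snapshot)


def diff_copy_list(old: Snapshot, new: Snapshot) -> List[str]:
    """With a diff: only files created or changed since the old snapshot.

    Deleted files need no copying, so they never appear here.
    """
    return sorted(path for path, version in new.items() if old.get(path) != version)


s0 = {f"/data/part-{i:05d}": 1 for i in range(10_000)}
s1 = dict(s0)
s1["/data/part-00042"] = 2  # one file modified
s1["/data/part-10000"] = 1  # one file added

print(len(full_copy_list(s1)))  # 10001
print(diff_copy_list(s0, s1))  # ['/data/part-00042', '/data/part-10000']
```

The full list grows with the size of the directory, while the diff-based list grows only with the amount of change, which also keeps memory use down for very large directories.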

How to use this feature?

The typical steps are as follows:

- Make the source directory snapshottable (if it is not already) and create a snapshot of it named s0.

- Copy that snapshot to the destination directory with DistCp: `hadoop distcp -update <source_dir>/.snapshot/s0 <target_dir>`

- Create a snapshot with the same name, s0, in the destination directory.

- Make some changes in the source directory.

- Create another snapshot of the source directory and name it s1.

- Run DistCp with the `-diff` option so that only the differences between s0 and s1 are applied to the destination: `hadoop distcp -update -diff s0 s1 <source_dir> <target_dir>`

- Create a snapshot with the same name, s1, in the destination directory.

- For each later backup cycle, repeat the last four steps with new snapshot names (for example, `-diff s1 s2`). The destination must not be modified between cycles, because `-diff` assumes that its latest snapshot matches its current contents.

After following these steps, each backup cycle copies only the changes recorded between two snapshots instead of the whole directory, which is where the improvements described above show up.
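The snapshot workflow can be scripted. The Python sketch below only assembles the commands and prints them (a dry run by default). `/data/src` and `/backup/dst` are placeholder paths, the helper names are hypothetical, and the sketch assumes both directories are on HDFS and reachable with the current client configuration; for a destination on another cluster, the destination-side commands would need to address that cluster. `hdfs dfsadmin -allowSnapshot` normally requires HDFS superuser rights.

```python
import subprocess
from typing import List, Tuple

Command = List[str]


def initial_sync(src: str, dst: str, snap: str = "s0") -> List[Command]:
    """First backup cycle: full copy of snapshot `snap`, then mirror it on dst."""
    return [
        ["hdfs", "dfsadmin", "-allowSnapshot", src],  # usually needs superuser
        ["hdfs", "dfs", "-createSnapshot", src, snap],
        # Copy from the read-only snapshot path, not the live directory.
        ["hadoop", "distcp", "-update", f"{src}/.snapshot/{snap}", dst],
        ["hdfs", "dfsadmin", "-allowSnapshot", dst],
        ["hdfs", "dfs", "-createSnapshot", dst, snap],
    ]


def incremental_sync(src: str, dst: str, prev: str, new: str) -> List[Command]:
    """Later cycles: snapshot src, apply only the prev->new diff, snapshot dst."""
    return [
        ["hdfs", "dfs", "-createSnapshot", src, new],
        ["hadoop", "distcp", "-update", "-diff", prev, new, src, dst],
        ["hdfs", "dfs", "-createSnapshot", dst, new],
    ]


def cycle_names(cycles: int) -> List[Tuple[str, str]]:
    """Snapshot name pairs for successive cycles: (s0, s1), (s1, s2), ..."""
    return [(f"s{i}", f"s{i + 1}") for i in range(cycles)]


def run(commands: List[Command], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    SRC, DST = "/data/src", "/backup/dst"  # placeholder paths
    run(initial_sync(SRC, DST))
    # In practice, source changes happen between these incremental cycles.
    for prev, new in cycle_names(2):
        run(incremental_sync(SRC, DST, prev, new))
```

Deriving each `-diff` pair from sequential names keeps the "from" snapshot of one cycle equal to the "to" snapshot of the previous one, which is what the `-diff` option expects.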

Inspired by the world of Big Data and Hadoop? Looking for an opportunity to build a Hadoop career? Your opportunities are here…

Find a course provider to learn Hadoop

Java training | J2EE training | J2EE Jboss training | Apache JMeter trainingTake the next step towards your professional goals in Hadoop

Don't hesitate to talk with our course advisor right now

Receive a call

Contact NowMake a call

+1-732-338-7323Take our FREE Skill Assessment Test to discover your strengths and earn a certificate upon completion.

Enroll for the next batch

Hadoop Hands-on Training with Job Placement

- Nov 4 2025

- Online

Hadoop Hands-on Training with Job Placement

- Nov 5 2025

- Online

Hadoop Hands-on Training with Job Placement

- Nov 6 2025

- Online

Hadoop Hands-on Training with Job Placement

- Nov 7 2025

- Online

Related blogs on Hadoop to learn more

Hadoop Big Data Analytics Market Share, Size, and Forecast to 2030

In an era driven by data, the Hadoop Big Data Analytics market stands at the forefront of innovation and transformation. The landscape is poised for exponential growth and evolution as we peer into the future. The "Hadoop Big Data Analytics Market Sh

Hadoop Certification Dumps with Exam Questions and Answers

We have collated some Hadoop certification dumps to make your preparation easy for the Hadoop exam. The questions are multiple-choice patters and we have also highlighted the answer in bold. A brief description of the answer is also mentioned for eas

Apache Hadoop 3.1.2, the brand new software to help

The recent update of Apache Hadoop 3.1.2 had the changes software engineers always intended in the Apache Hadoop- 2. Version. This version includes improvements and additional features from the previous Apache Hadoop, This version is available (GA) a

Learning Hadoop would enhance your Big Data career!

Big Data was among the most sought after careers which are louder and deeper in recent years. Though there are many different interpretations of big data, the need to manage huge clusters of unstructured data matter in the end. Big data simply refers

Top 4 Reasons to enroll for Hadoop Training!

#4 Top Companies around the world into Hadoop Technology World's top leading companies such as DELL, IBM, AWS (Amazon Web Services), Hortonworks, MAPR Technologies, DATASTAX, Cloudera, SUPERMICR, Datameer, adapt, Zettaset, Pentaho, KARMASPHERE and m

Important Components in Apache Hadoop Stack

Apache HDFS Apache HDFS is one of the core significant technologies of Apache Hadoop which acted as a driving force for the next level elevation of Big Data industry. This cost-effective technology to process huge volumes of data revolutionized the

Apache Hadoop Essential Training Course

Learn the Fundamentals of Apache Hadoop Introduction to Apache Hadoop: This introductory class describes the students to learn the basics of Apache Hadoop. This course is a short and sweet preface to the point of Hadoop Distributed File System and

Hadoop simply dominates the big data industry!

Anyone in the data science market must have witnessed the enormous growth and popularity of Hadoop in such a short time. How Hadoop made such a drastic dominance in the big data mainstream? Let us examine the maturity of it in this blog.

Top 5 differences between Apache Hadoop and Spark

"Explore the key distinctions between Apache Hadoop and Spark in this comprehensive comparison, highlighting their unique features and applications in big data processing."

Hadoop developer among the most paid professionals

It turns out that Hadoop developers are among the top paid professionals across the world. Below is the list of most paid professions where Hadoop skills occupy most of them. MapReduce is worth $127,315

Latest blogs on technology to explore

Cyber Security in 2025: The Golden Ticket to a Future-Proof Career

Cyber security jobs are growing 35% faster than any other tech field (U.S. Bureau of Labor Statistics, 2024)—and the average salary is $100,000+ per year! In a world where data breaches cost businesses $4.45 million on average (IBM, 2024), cyber secu

SAP SD in 2025: Your Ticket to a High-Flying IT Career

In the fast-paced world of IT and enterprise software, SAP SD (Sales and Distribution) is the secret sauce that keeps businesses running smoothly. Whether it’s managing customer orders, pricing, shipping, or billing, SAP SD is the backbone of sales o

SAP FICO in 2025: Salary, Jobs & How to Get Certified

AP FICO professionals earn $90,000–$130,000/year in the USA and Canada—and demand is skyrocketing! If you’re eyeing a future-proof IT career, SAP FICO (Financial Accounting & Controlling) is your golden ticket. But where do you start? Sulekha IT Serv

Train Like an AI Engineer: The Smartest Career Move You’ll Make This Year!

Why AI Engineering Is the Hottest Skillset Right Now From self-driving cars to chatbots that sound eerily human, Artificial Intelligence is no longer science fiction — it’s the backbone of modern tech. And guess what? Companies across the USA and Can

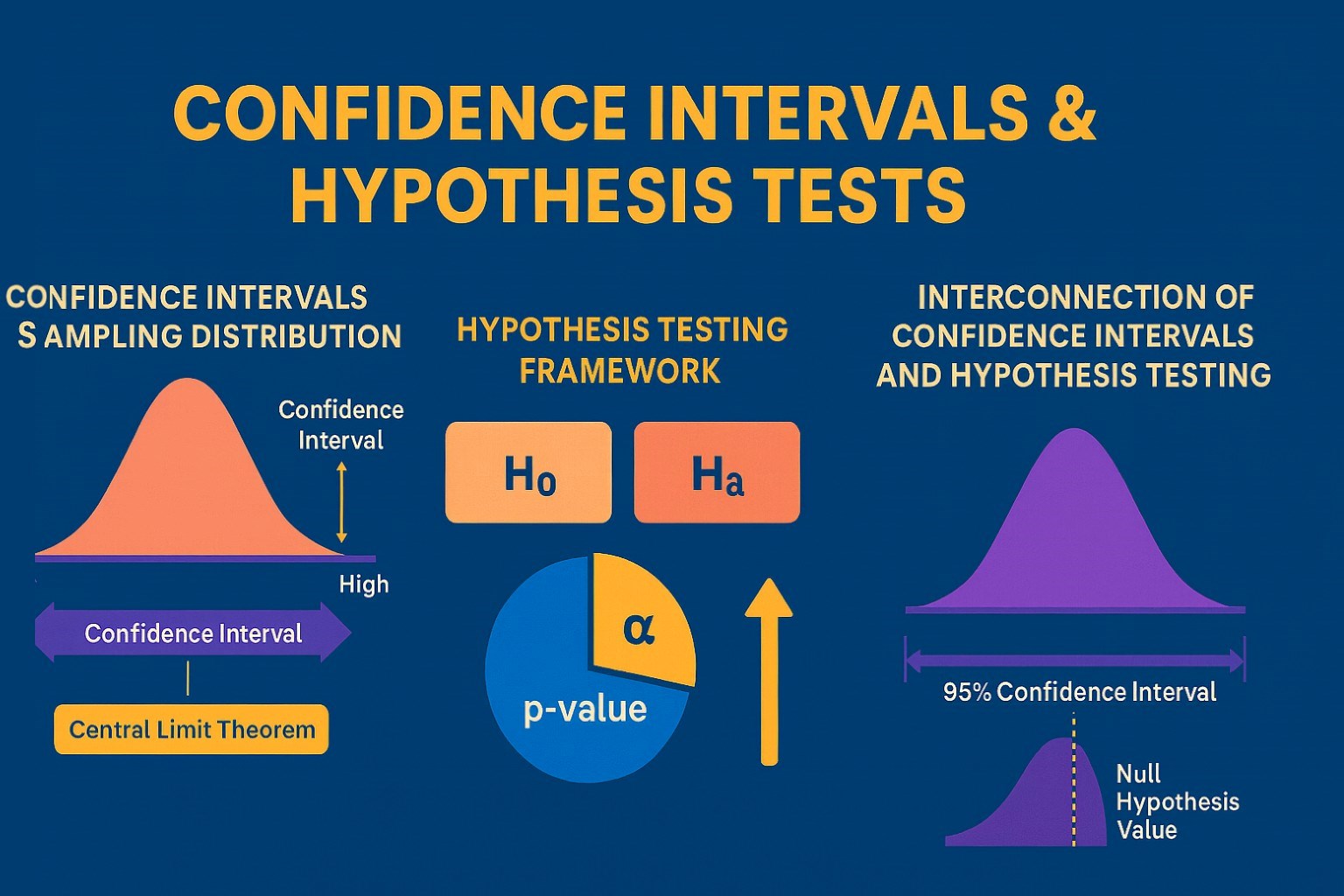

Confidence Intervals & Hypothesis Tests: The Data Science Path to Generalization

Learn how confidence intervals and hypothesis tests turn sample data into reliable population insights in data science. Understand CLT, p-values, and significance to generalize results, quantify uncertainty, and make evidence-based decisions.

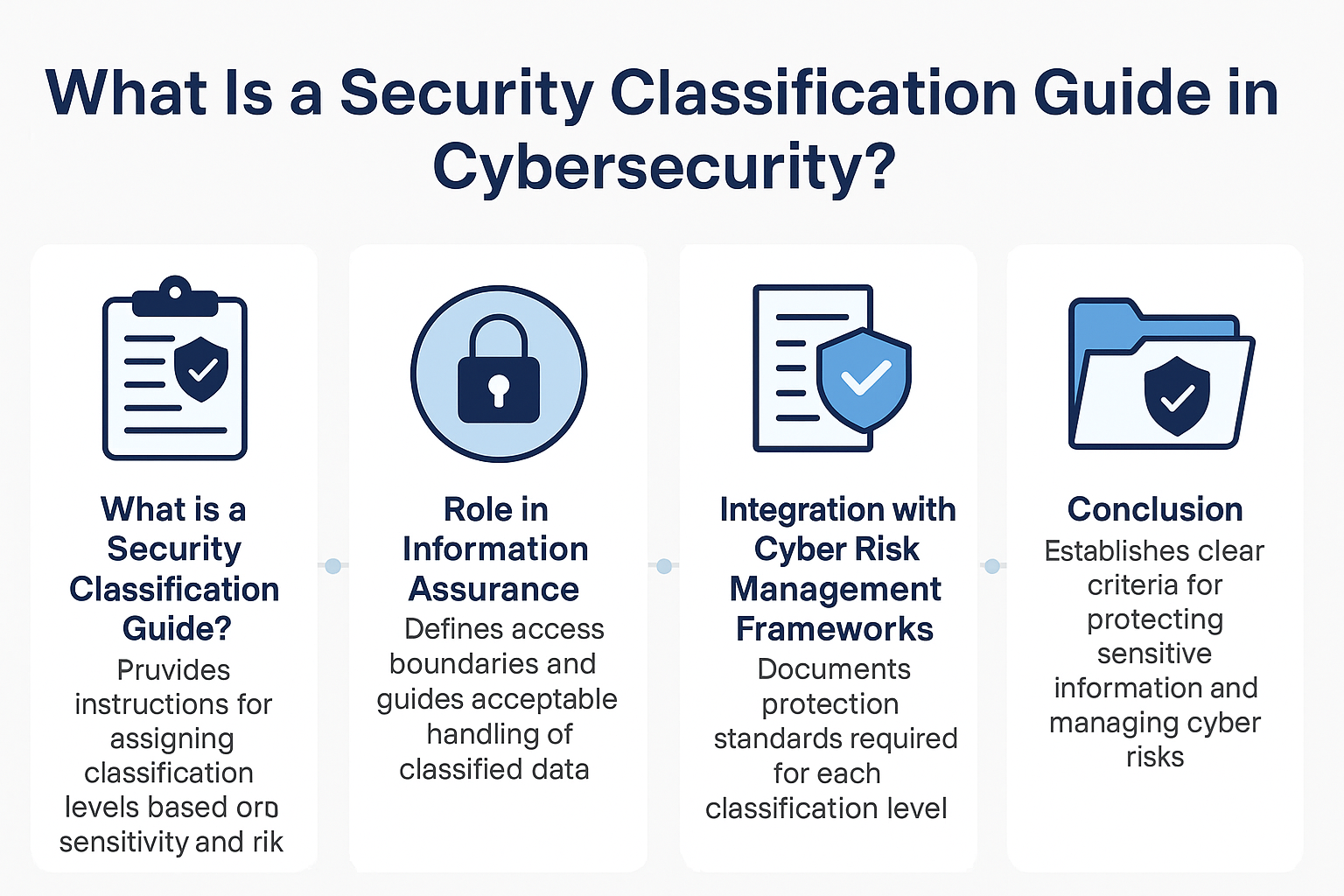

What Is a Security Classification Guide in Cybersecurity?

A Security Classification Guide (SCG) defines how to categorize information assets by sensitivity, with clear instructions from authorized officials to ensure consistent, compliant data handling.

Artificial Intelligence – Field of Study

Explore how Artificial Intelligence blends Machine Learning, Deep Learning, NLP, and Computer Vision to build intelligent systems that learn, reason, and decide. Discover real world applications, ethics, and booming career scope as AI education deman

Understanding Artificial Intelligence: Hype, Reality, and the Road Ahead

Explore the reality of Artificial Intelligence (AI) — its impact, how it works, and its potential risks. Understand AI's benefits, challenges, and how to navigate its role in shaping industries and everyday life with expert training programs

How Much Do Healthcare Administrators Make?

Discover how much healthcare administrators make, the importance of healthcare, career opportunities, and potential job roles. Learn about salary ranges, career growth, and training programs with Sulekha to kickstart your healthcare administration jo

How to Gain the High-Income Skills Employers Are Looking For?

Discover top high-income skills like software development, data analysis, AI, and project management that employers seek. Learn key skills and growth opportunities to boost your career.