Hadoop Certification Dumps with Exam Questions and Answers

Hadoop Certification

A Hadoop certification endorses your skills and makes you employment-ready. It also earns you recognition for your knowledge.

Hadoop is an open-source Apache product that stores and processes big data. By taking a Hadoop course and obtaining the Hadoop certification, you gain an edge over your peers, because enterprises and employers value the certification and look up to your skills. The certification endorses your skills in HDFS, YARN, and MapReduce, and you will gain comprehensive knowledge of Pig, Hive, Sqoop, Flume, Oozie, and HBase.

Benefits of the Hadoop Certification

Hadoop certification endorses your skills in the big data stream. It enhances your skills and knowledge in the Hadoop ecosystem, establishes you as an expert in the big data domain, and helps IT professionals transition into big data. Certified professionals are more likely to be preferred by employers and offered better pay.

Benefits to your Hadoop Career

The global Hadoop data analytics market is anticipated to grow at a CAGR of 36.37% from 2018 to 2023 and to be worth USD 52 billion by the end of 2023. This points to enormous potential for Hadoop developers in the near future, with demand still rising. Hadoop technology is widely adopted across industries such as IT, telecommunications, healthcare, transportation, and manufacturing.

Hadoop Certification Exam Questions Sample for Practice

We have collated some Hadoop certification dumps to make your preparation for the Hadoop exam easy. The questions follow a multiple-choice pattern, and each one comes with a brief explanation of the answer for easy understanding and recall. Using these Hadoop certification dumps, you can evaluate yourself by taking a Hadoop practice test, and reading through the questions will help you quickly recap all the topics you have learned. This set of Hadoop exam questions and answers is a quick capsule to prepare for the Apache, Cloudera, and Hortonworks certifications.

Exam details: https://learn.hortonworks.com/hdp-certified-developer-hdpcd2019-exam

Exam Name: Hadoop certification

Duration: 120 minutes

No. of questions:

Passing score: 70%

Validated against: Apache

Format: Multiple choice questions

Exam price: $295

--------------------------------------------------------------------------------------------------------------------------------

1. What is included in Spark's libraries?

A. SQL

B. Separate tools available

C. Streaming

D. Spark core

E. All of the above

F. Options A, C, D, and E.

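Explanation- Spark Core is the base engine, and libraries such as Spark SQL and Spark Streaming are available on top of it as separate tools. Hence, all of the above are included (option E).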

--------------------------------------------------------------------------------------------------------------------------------

2. In which modes can Hadoop run?

A. Standalone mode

B. Fully distributed mode or multi-node cluster

C. Pseudo-distributed mode or single-node cluster

D. All of the above

Explanation- Hadoop can run in all three modes. Standalone mode is the default mode; in it, input and output operations use the local file system. Fully distributed mode is the mode in which Hadoop runs in production. In pseudo-distributed mode, the configuration files must be set up, and all daemons run on a single node.

------------------------------------------------------------------------------------------------------------------------

3. What are the separate nodes used in fully distributed mode?

A. Master node

B. Slave node

C. Both of the above

D. None of the above

Explanation- Fully distributed mode is also called a multi-node cluster. It uses two separate kinds of nodes, master and slave, whereas in pseudo-distributed mode the master and slave run on the same node.

-----------------------------------------------------------------------------------------------------------------------------

4. What are the formats for input in Hadoop?

A. Text input format

B. Key-value input format

C. Sequence file input format.

D. All of the above

Explanation- Text input format, key-value input format, and sequence file input format are the three most common input formats used in Hadoop. Text input format is the default input format; it reads plain text line by line, using each line's byte offset as the key and the line itself as the value. Key-value input format also reads plain text files, but it breaks each line into a key and a value at a separator character (a tab by default). Sequence file input format reads sequence files, which store binary key-value pairs in sequence.
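To make the difference between the first two formats concrete, here is a minimal Python sketch that mimics, outside Hadoop, the key-value pairs a mapper would receive. It assumes TextInputFormat keys each line by the byte offset where it starts, and that KeyValueTextInputFormat splits each line at the first tab (its default separator). The function names are hypothetical helpers, not Hadoop APIs.

```python
def text_input_records(data: bytes) -> list:
    """Mimic TextInputFormat: key = byte offset where the line starts, value = line text."""
    records = []
    offset = 0
    for raw_line in data.splitlines(keepends=True):
        line = raw_line.rstrip(b"\r\n").decode("utf-8")
        records.append((offset, line))
        offset += len(raw_line)  # advance past the line and its terminator
    return records


def key_value_input_records(data: bytes, separator: str = "\t") -> list:
    """Mimic KeyValueTextInputFormat: split each line at the first separator.

    A line without the separator becomes the key, with an empty value.
    """
    records = []
    for raw_line in data.splitlines():
        key, _, value = raw_line.decode("utf-8").partition(separator)
        records.append((key, value))
    return records


sample = b"apple\t3\nbanana\t5\n"
print(text_input_records(sample))       # [(0, 'apple\t3'), (8, 'banana\t5')]
print(key_value_input_records(sample))  # [('apple', '3'), ('banana', '5')]
```

The same bytes produce numeric keys under the text format and textual keys under the key-value format, which is why the mapper's input key type changes with the input format.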

--------------------------------------------------------------------------------------------------------------------------------

5. From the option mentioned below, what is the role of job tracker in Hadoop?

A. The JobTracker communicates with the NameNode to identify the data location.

B. It monitors the individual TaskTrackers and submits the overall job status back to the client.

C. The JobTracker also tracks the execution of the MapReduce workload local to the slave node.

D. All of the above.

Answer- Option D

Explanation- The JobTracker communicates with the NameNode to identify the data location, monitors the individual TaskTrackers and submits the overall job status back to the client, and tracks the execution of the MapReduce workload local to the slave node. All of the options above are roles of the JobTracker in Hadoop (MapReduce 1).

----------------------------------------------------------------------------------------------------------------------------

6. What are the basic parameters of a mapper?

A. LongWritable and Text

B. Text and IntWritable

C. Both of the above.

D. None of the above.

Explanation- A mapper has two basic pairs of parameters: its input key and value types are LongWritable and Text, and its output key and value types are Text and IntWritable. Hence, the correct option is C.
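The classic word-count job shows where these types appear: the mapper reads (LongWritable offset, Text line) pairs and emits (Text word, IntWritable 1) pairs. The Python sketch below only simulates that flow, with plain int and str values standing in for the Writable types; it is not Hadoop code, and the shuffle step is approximated with an in-memory sort and group.

```python
from itertools import groupby
from operator import itemgetter


def word_count_mapper(offset: int, line: str):
    """Input (LongWritable, Text) -> here (int, str); output (Text, IntWritable) -> (str, int)."""
    for word in line.split():
        yield word, 1


def word_count_reducer(word: str, counts):
    """Input (Text, list of IntWritable); output (Text, IntWritable) with the total."""
    yield word, sum(counts)


def run_word_count(lines):
    # Map phase: keys are the byte offsets a TextInputFormat would supply.
    mapped = []
    offset = 0
    for line in lines:
        mapped.extend(word_count_mapper(offset, line))
        offset += len(line.encode("utf-8")) + 1  # +1 for the newline
    # Shuffle/sort phase: group intermediate pairs by key.
    mapped.sort(key=itemgetter(0))
    result = {}
    for word, group in groupby(mapped, key=itemgetter(0)):
        for key, total in word_count_reducer(word, (count for _, count in group)):
            result[key] = total
    return result


print(run_word_count(["the cat", "the dog"]))  # {'cat': 1, 'dog': 1, 'the': 2}
```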

------------------------------------------------------------------------------------------------------------------------------

7. What are the core components of Hadoop?

A. HDFS

B. MapReduce

C. Pig

D. Hive

E. Both options A and B

F. Both options C and D

G. All of the above

Explanation- Pig and Hive are data access components used in Hadoop, whereas MapReduce and HDFS are the core components, and HBase is a data storage component. Hence, the correct option is E.

----------------------------------------------------------------------------------------------------------------------------

8. What are the data access components used by Hadoop?

A. HDFS

B. MapReduce

C. Pig

D. Hive

E. Both options A and B

F. Both options C and D

G. All of the above

Explanation- Pig and Hive are data access components used in Hadoop, whereas MapReduce and HDFS are the core components, and HBase is a data storage component. Hence, the correct option is F.

--------------------------------------------------------------------------------------------------------------------------------

9. What are the data storage components that Hadoop uses?

A. HDFS

B. MapReduce

C. Pig

D. Hive

E. HBase

Explanation- Pig and Hive are data access components used in Hadoop, whereas MapReduce and HDFS are the core components, and HBase is a data storage component. Hence, the correct option is E.

--------------------------------------------------------------------------------------------------------------------------------

10. Which of the following files are configuration files for Hadoop?

A. core-site.xml

B. mapred-site.xml

C. hdfs-site.xml

D. All of the above.

Explanation- core-site.xml, mapred-site.xml, and hdfs-site.xml are all Hadoop configuration files (Hadoop 2 and later also use yarn-site.xml for YARN settings). Hence, the correct option is D.
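All of these files share the same `<configuration>`/`<property>` XML layout. As a rough sketch, the Python below parses two such snippets with the standard library's `xml.etree.ElementTree`. The property names `fs.defaultFS` (core-site.xml) and `dfs.replication` (hdfs-site.xml) are real Hadoop keys, but the values are only examples typical of a pseudo-distributed setup, and `read_hadoop_config` is a hypothetical helper, not part of Hadoop.

```python
import xml.etree.ElementTree as ET

# Example contents in the layout shared by core-site.xml, hdfs-site.xml and
# mapred-site.xml; the values are typical of a pseudo-distributed setup.
CORE_SITE = """<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>"""

HDFS_SITE = """<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>"""


def read_hadoop_config(xml_text: str) -> dict:
    """Return {name: value} for every <property> element in a Hadoop-style config file."""
    root = ET.fromstring(xml_text)
    config = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name is not None:
            config[name.strip()] = (prop.findtext("value") or "").strip()
    return config


print(read_hadoop_config(CORE_SITE))  # {'fs.defaultFS': 'hdfs://localhost:9000'}
print(read_hadoop_config(HDFS_SITE))  # {'dfs.replication': '1'}
```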

--------------------------------------------------------------------------------------------------------------------------

11. Assuming a 64 MB block size, how many input splits will the Hadoop framework create for three files of 64 KB, 65 MB, and 127 MB?

A. 5

B. 3

C. 2

D. 4

Explanation- With a 64 MB block size, Hadoop creates one split for the 64 KB file, two splits for the 65 MB file, and two splits for the 127 MB file. Hence, it creates five splits in total (option A).
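The arithmetic behind this answer can be sketched in Python. `block_based_splits` reproduces the exam's reasoning: one split per full or partial 64 MB block, where the block size is an assumption of the question. For comparison, `slop_splits` approximates the loop in Hadoop's `FileInputFormat`, which only cuts another full split while more than 1.1 times the split size (its `SPLIT_SLOP` constant) remains. Under that rule the 65 MB file stays in one split, so the same three files give four splits. Both functions are illustrative and are not Hadoop APIs.

```python
import math

MB = 1024 * 1024
BLOCK_SIZE = 64 * MB  # block size assumed by the question (the Hadoop 1.x default)
SPLIT_SLOP = 1.1      # slack factor used by Hadoop's FileInputFormat


def block_based_splits(file_size: int, block_size: int = BLOCK_SIZE) -> int:
    """Exam-style count: one split per full or partial block."""
    return max(1, math.ceil(file_size / block_size))


def slop_splits(file_size: int, split_size: int = BLOCK_SIZE) -> int:
    """Approximate FileInputFormat for a non-empty file: cut full splits while
    more than SPLIT_SLOP x split_size remains, then emit the tail as one split."""
    splits = 0
    remaining = file_size
    while remaining / split_size > SPLIT_SLOP:
        splits += 1
        remaining -= split_size
    if remaining > 0:
        splits += 1  # the tail, up to 1.1 x split_size, becomes the last split
    return splits


files = {"64 KB": 64 * 1024, "65 MB": 65 * MB, "127 MB": 127 * MB}
print(sum(block_based_splits(size) for size in files.values()))  # 5
print(sum(slop_splits(size) for size in files.values()))         # 4
```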

------------------------------------------------------------------------------------------------------------------------

12. Which of the following companies use Hadoop?

A. Yahoo and Facebook

B. Facebook and Amazon

C. Netflix and Adobe

D. Twitter and Adobe.

E. All of the above.

Explanation- Yahoo, Facebook, Amazon, Netflix, eBay, Adobe, Spotify, and Twitter all use Hadoop, so the correct option is E. Note that Yahoo was the biggest contributor to the creation of Hadoop, and Yahoo's search engine uses Hadoop.

------------------------------------------------------------------------------------------------------------------------------

13. What is the function of the NameNode in Hadoop?

A. To manage the metadata.

B. To keep track of the latest checkpoint in the directory.

C. To maintain an up-to-date in-memory copy of the file system

D. All of the above

Explanation- Keeping track of the latest checkpoint in the directory is done by the Checkpoint node, and maintaining an up-to-date in-memory copy of the file system is done by the Backup node. Managing the metadata is the NameNode's function, so the correct option is A.

---------------------------------------------------------------------------------------------------------------------------

14. What is the function of the checkpoint node?

A. To manage the metadata.

B. To keep track of the latest checkpoint in the directory.

C. To maintain an up-to-date in-memory copy of the file system

D. All of the above

Explanation- Managing the metadata is done by the NameNode, and maintaining an up-to-date in-memory copy of the file system is done by the Backup node. Keeping track of the latest checkpoint in the directory is the Checkpoint node's function, so the correct option is B.

-----------------------------------------------------------------------------------------------------------------------

15. What is the function of the backup node?

A. To manage the metadata.

B. To keep track of the latest checkpoint in the directory.

C. To maintain an up-to-date in-memory copy of the file system

D. All of the above

Explanation- Managing the metadata is done by the NameNode, and keeping track of the latest checkpoint in the directory is done by the Checkpoint node. Maintaining an up-to-date in-memory copy of the file system is the Backup node's function, so the correct option is C.

--------------------------------------------------------------------------------------------------------------------------------

16. Which of the following are features of Apache Hadoop?

A. Apache Hadoop brings flexibility to data processing and allows for faster data processing.

B. Its robust ecosystem helps meet developers' analytical needs.

C. It supports POSIX-style file system extended attributes.

D. All of the above.

Explanation- Apache Hadoop brings flexibility to data processing and allows for faster data processing, its robust ecosystem helps meet developers' analytical needs, and it supports POSIX-style file system extended attributes. Hence, the correct option is D.

---------------------------------------------------------------------------------------------------------------------

17. In which situations is HBase the right choice?

A. When data is stored in the form of a collection.

B. A schema

C. If the application demands key-based access to data while retrieving it.

D. All of the above.

Explanation- HBase suits big applications only under certain conditions: data stored in the form of a collection, a schema, and an application that demands key-based access to data while retrieving it. Hence, the correct option is D.

-------------------------------------------------------------------------------------------------------------------

18. Which of the following are key components of HBase?

A. Region

B. Region server

C. HBase master

D. Zookeeper

E. Catalog tables

F. All of the above

Explanation- Region, region server, HBase master, ZooKeeper, and catalog tables are all components of HBase. A region contains an in-memory data store (MemStore) and HFiles. A region server monitors its regions, and the HBase master monitors the region servers. ZooKeeper monitors the relationship between the client and the HBase master components. There are two catalog tables, -ROOT- and .META.: the -ROOT- table keeps track of the .META. table, whereas the .META. table stores all the regions in the system. (Since HBase 0.96, the -ROOT- table has been removed, and only the meta table, hbase:meta, remains.)
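To illustrate what the catalog lookup achieves, the sketch below models a .META.-style table as a sorted list of region start keys and finds the region holding a given row key with a binary search. The region names and key boundaries are made up, and real HBase clients perform this lookup through the client library and cache the results; treat this only as a conceptual model.

```python
from bisect import bisect_right

# Hypothetical catalog: each region covers [its start key, the next region's start key).
# The first region starts at the empty key, so every row key falls into some region.
# HBase compares row keys as bytes; plain ASCII strings sort the same way here.
REGION_START_KEYS = ["", "g", "n", "t"]
REGION_NAMES = ["region-1", "region-2", "region-3", "region-4"]


def find_region(row_key: str) -> str:
    """Return the name of the region whose key range contains row_key."""
    index = bisect_right(REGION_START_KEYS, row_key) - 1
    return REGION_NAMES[index]


print(find_region("apple"))  # region-1
print(find_region("hbase"))  # region-2
print(find_region("zebra"))  # region-4
```

A start key is inclusive, so the row key "g" itself lands in region-2.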

--------------------------------------------------------------------------------------------------------------------

19. Which of the following are record-level operational commands in HBase?

A. Put and get

B. Increment and scan

C. Disable and scan

D. Options A and B

E. Options B and C

Explanation- Record-level commands include put, get, increment, scan, and delete, whereas table-level operational commands include describe, list, drop, and disable. Option C includes disable, which is not a record-level command, so the correct option is D.
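The record-level commands all act on individual rows or row ranges rather than on the table as a whole. The toy Python class below mimics their semantics on an in-memory map. It is not the HBase shell or client API: the class and method names are hypothetical, and it ignores column families as storage units, cell versions, and timestamps.

```python
from typing import Iterator, Optional, Tuple


class ToyTable:
    """In-memory stand-in for an HBase table: row key -> {column: value}."""

    def __init__(self) -> None:
        self.rows = {}

    def put(self, row: str, column: str, value) -> None:
        """Write one cell, creating the row if needed."""
        self.rows.setdefault(row, {})[column] = value

    def get(self, row: str) -> dict:
        """Return a copy of all cells in one row (empty if the row is missing)."""
        return dict(self.rows.get(row, {}))

    def increment(self, row: str, column: str, amount: int = 1) -> int:
        """HBase increments a counter cell atomically; here it is a plain addition."""
        cells = self.rows.setdefault(row, {})
        cells[column] = cells.get(column, 0) + amount
        return cells[column]

    def scan(self, start_row: str = "", stop_row: Optional[str] = None) -> Iterator[Tuple[str, dict]]:
        """Yield rows in sorted key order within [start_row, stop_row)."""
        for row in sorted(self.rows):
            if row < start_row:
                continue
            if stop_row is not None and row >= stop_row:
                break
            yield row, dict(self.rows[row])

    def delete(self, row: str, column: Optional[str] = None) -> None:
        """Delete one cell, or the whole row when no column is given."""
        if column is None:
            self.rows.pop(row, None)
        else:
            self.rows.get(row, {}).pop(column, None)


table = ToyTable()
table.put("user1", "info:name", "alice")
table.put("user2", "info:name", "bob")
table.increment("user1", "info:visits")
table.increment("user1", "info:visits")
print(table.get("user1"))  # {'info:name': 'alice', 'info:visits': 2}
table.delete("user2")
print([row for row, _ in table.scan()])  # ['user1']
```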

-----------------------------------------------------------------------------------------------------------------

20. Which of the following are NOT HDFS daemons?

A. NameNode and DataNode

B. DataNode and Secondary NameNode

C. ResourceManager and NodeManager

D. NameNode and Secondary NameNode

Explanation- There are two types of Hadoop daemons: HDFS and YARN. The HDFS daemons are the NameNode, DataNode, and Secondary NameNode, whereas the YARN daemons are the ResourceManager and NodeManager. Option C contains the YARN daemons, which are not HDFS daemons. Hence, it is the correct option.

---------------------------------------------------------------------------------------------------------------------

21. Which of the following are YARN daemons?

A. NameNode and DataNode

B. DataNode and Secondary NameNode

C. ResourceManager and NodeManager

D. NameNode and Secondary NameNode

Explanation- There are two types of Hadoop daemons: HDFS and YARN. The HDFS daemons are the NameNode, DataNode, and Secondary NameNode, whereas the YARN daemons are the ResourceManager and NodeManager. Option C contains the YARN daemons. Hence, it is the correct option.

---------------------------------------------------------------------------------------------------------------------

22. Which of the following are components of a region server?

A. Block cache

B. HFile

C. WAL

D. MemStore

E. All of the above.

Explanation- Block cache, HFile, WAL (write-ahead log), and MemStore are all components of a region server. Hence, the correct option is E.

------------------------------------------------------------------------------------------------------------------

23. State whether the following statement is true or false:

Flume provides 100% reliability to the data flow.

A. True

B. False

C. Partly true and partly false

D. None of the above

Explanation- Apache Flume provides end-to-end reliability because of its transactional approach to data flow. Hence, the statement is true (option A).

--------------------------------------------------------------------------------------------------------------------------------

24. Which of the following incremental import modes does Sqoop support?

A. Append

B. Last modified

C. Mode and col

D. A and B both

E. B and C both

Explanation- Sqoop supports two types of incremental import: append and lastmodified. Append mode imports newly inserted rows, whereas lastmodified mode imports rows that were inserted or updated since the last import. Hence, the correct option is D.
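The difference between the two modes comes down to which check column Sqoop compares against the saved last value (the real import arguments are `--incremental append` or `--incremental lastmodified`, together with `--check-column` and `--last-value`). The Python below only simulates that row-selection logic on an in-memory list; the table contents and the `select_incremental` helper are hypothetical.

```python
from datetime import datetime

# Hypothetical source table. Rows 1 and 2 were imported on 2024-03-01;
# since then row 2 was edited and row 3 was inserted.
ROWS = [
    {"id": 1, "name": "alice", "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "name": "bobby", "updated_at": datetime(2024, 3, 5)},
    {"id": 3, "name": "carol", "updated_at": datetime(2024, 3, 6)},
]


def select_incremental(rows, mode, check_column, last_value):
    """Return the rows an incremental import would pick up and the new last value.

    Both modes compare the check column with the saved last value. What differs is
    the column: append expects an ever-increasing key such as an id, so edits to
    existing rows are missed; lastmodified expects a timestamp that changes on
    every update, so edited rows are picked up as well.
    """
    if mode not in ("append", "lastmodified"):
        raise ValueError(f"unsupported incremental mode: {mode}")
    selected = [row for row in rows if row[check_column] > last_value]
    new_last_value = max((row[check_column] for row in selected), default=last_value)
    return selected, new_last_value


appended, last_id = select_incremental(ROWS, "append", "id", 2)
print([row["id"] for row in appended], last_id)  # [3] 3

modified, last_ts = select_incremental(ROWS, "lastmodified", "updated_at", datetime(2024, 3, 1))
print([row["id"] for row in modified])  # [2, 3]
```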

----------------------------------------------------------------------------------------------------------------------------

25. Which of the following companies use Apache ZooKeeper?

A. Yahoo

B. Helprace

C. Rackspace

D. All of the above.

Explanation- Yahoo, Helprace, and Rackspace, as well as projects such as Solr and Neo4j, use ZooKeeper. Hence, the correct option is D.

-----------------------------------------------------------------------------------------------------------------------------

26. What are the data integration components of the Hadoop ecosystem?

A. Sqoop and Chukwa

B. Ambari and ZooKeeper

C. Pig and Hive

D. HBase

Explanation- Pig and Hive are the data access components of Hadoop. HBase, on the other hand, is the data storage component, and Ambari and ZooKeeper are the data management and monitoring components. Hence, the correct answer is Sqoop and Chukwa (option A). The data integration components also include Apache Flume.

-------------------------------------------------------------------------------------------------------------------------------

27. What are the data management and monitoring components?

A. Sqoop and Chukwa

B. Ambari and ZooKeeper

C. Pig and Hive

D. HBase

Answer- B

Explanation- Pig and Hive are the data access components of Hadoop. HBase, on the other hand, is the data storage component, and Sqoop and Chukwa are the data integration components. Ambari and ZooKeeper are the data management and monitoring components, so the correct option is B. The data management and monitoring components also include Oozie.

-------------------------------------------------------------------------------------------------------------------------------

28. What are the components of data serialization?

A. Sqoop and Chukwa

B. Ambari and ZooKeeper

C. Pig and Hive

D. Thrift and Avro

Explanation- Pig and Hive are the data access components of Hadoop, HBase is the data storage component, Ambari and ZooKeeper are the data management and monitoring components, and Sqoop and Chukwa are the data integration components. Thrift and Avro are the data serialization components. Hence, the correct answer is D.

--------------------------------------------------------------------------------------------------------------------------------

29. Which of the following are the data intelligence components of Hadoop?

A. Sqoop and Chukwa

B. Ambari and ZooKeeper

C. Pig and Hive

D. Apache Mahout and Drill

Explanation- Pig and Hive are the data access components of Hadoop, HBase is the data storage component, Ambari and ZooKeeper are the data management and monitoring components, and Sqoop and Chukwa are the data integration components. Apache Mahout and Drill are the data intelligence components. Hence, the correct answer is D.

-------------------------------------------------------------------------------------------------------------------------------

30. What is the correct sequence for the steps used in deploying a big data solution?

A. Data storage, data processing, data ingestion.

B. Data ingestion, data storage, data processing

C. Data processing, data storage, data ingestion.

D. Data ingestion, data processing, data storage.

Explanation- Deploying a big data solution involves three steps: data ingestion, data storage, and data processing. The process starts with data ingestion, in which data is extracted from different sources. In the next step, data storage, the data is stored in HDFS or in a NoSQL database such as HBase. The last step is data processing, in which the data is processed using a framework such as Spark, MapReduce, or Hive. Hence, the correct option is B.

----------------------------------------------------------------------------------------------------------------------------

31. Which of the following companies has contributed the most to Hadoop?

A. Yahoo

B. Netflix

C. eBay

D. Twitter.

Explanation- Yahoo, Facebook, Amazon, Netflix, eBay, Adobe, Spotify, and Twitter are among the companies that use Hadoop, but Yahoo was the biggest contributor to the creation of Hadoop, and Yahoo's search engine uses Hadoop. Hence, the correct option is A.

-----------------------------------------------------------------------------------------------------------------------------

32. Which of the following are among the four V's of big data?

A. Volume

B. Velocity

C. Variety

D. All of the above

Explanation- The four V's of big data are volume, velocity, variety, and veracity. Volume refers to the scale of data, velocity to the analysis of streaming data, variety to the different kinds of data, and veracity to the uncertainty of data. Hence, the correct option is D.

--------------------------------------------------------------------------------------------------------------------------------

33. Which of the following file formats can be used in Hadoop?

A. CSV and JSON files

B. Avro and sequence files

C. Columnar and Parquet files

D. All of the above.

Explanation- CSV and JSON files, Avro and sequence files, and columnar and Parquet files are all file formats accepted by Hadoop. Hence, the correct option is D.

-------------------------------------------------------------------------------------------------------------------------------

34. Which of the following are steps in deploying a big data solution?

A. Data storage

B. Data processing

C. Data ingestion.

D. All of the above.

Explanation- Deploying a big data solution involves three steps: data ingestion, data storage, and data processing. The process starts with data ingestion, in which data is extracted from different sources. In the next step, data storage, the data is stored in HDFS or in a NoSQL database such as HBase. The last step is data processing, in which the data is processed using a framework such as Spark, MapReduce, or Hive. Hence, the correct option is D.

Find a course provider to learn Hadoop

Java training | J2EE training | J2EE Jboss training | Apache JMeter trainingTake the next step towards your professional goals in Hadoop

Don't hesitate to talk with our course advisor right now

Receive a call

Contact NowMake a call

+1-732-338-7323Take our FREE Skill Assessment Test to discover your strengths and earn a certificate upon completion.

Enroll for the next batch

Hadoop Hands-on Training with Job Placement

- Dec 15 2025

- Online

Hadoop Hands-on Training with Job Placement

- Dec 16 2025

- Online

Hadoop Hands-on Training with Job Placement

- Dec 17 2025

- Online

Hadoop Hands-on Training with Job Placement

- Dec 18 2025

- Online

Hadoop Hands-on Training with Job Placement

- Dec 19 2025

- Online

Related blogs on Hadoop to learn more

Hadoop Big Data Analytics Market Share, Size, and Forecast to 2030

In an era driven by data, the Hadoop Big Data Analytics market stands at the forefront of innovation and transformation. The landscape is poised for exponential growth and evolution as we peer into the future. The "Hadoop Big Data Analytics Market Sh

Apache Hadoop 3.1.2, the brand new software to help

The recent update of Apache Hadoop 3.1.2 had the changes software engineers always intended in the Apache Hadoop- 2. Version. This version includes improvements and additional features from the previous Apache Hadoop, This version is available (GA) a

Learning Hadoop would enhance your Big Data career!

Big Data was among the most sought after careers which are louder and deeper in recent years. Though there are many different interpretations of big data, the need to manage huge clusters of unstructured data matter in the end. Big data simply refers

Top 4 Reasons to enroll for Hadoop Training!

#4 Top Companies around the world into Hadoop Technology World's top leading companies such as DELL, IBM, AWS (Amazon Web Services), Hortonworks, MAPR Technologies, DATASTAX, Cloudera, SUPERMICR, Datameer, adapt, Zettaset, Pentaho, KARMASPHERE and m

Important Components in Apache Hadoop Stack

Apache HDFS Apache HDFS is one of the core significant technologies of Apache Hadoop which acted as a driving force for the next level elevation of Big Data industry. This cost-effective technology to process huge volumes of data revolutionized the

Apache Hadoop Essential Training Course

Learn the Fundamentals of Apache Hadoop Introduction to Apache Hadoop: This introductory class describes the students to learn the basics of Apache Hadoop. This course is a short and sweet preface to the point of Hadoop Distributed File System and

Hadoop simply dominates the big data industry!

Anyone in the data science market must have witnessed the enormous growth and popularity of Hadoop in such a short time. How Hadoop made such a drastic dominance in the big data mainstream? Let us examine the maturity of it in this blog.

Top 5 differences between Apache Hadoop and Spark

"Explore the key distinctions between Apache Hadoop and Spark in this comprehensive comparison, highlighting their unique features and applications in big data processing."

Hadoop developer among the most paid professionals

It turns out that Hadoop developers are among the top paid professionals across the world. Below is the list of most paid professions where Hadoop skills occupy most of them. MapReduce is worth $127,315

Significance of Video Analytics on Hadoop

As a matter of fact, Big Data is no longer a strange term. The worldwide organizations and the world of businesses recognize it as one of the rapidly growing area in the Information Technology. Interestingly, the world keeps getting flooded with data

Latest blogs on technology to explore

From Student to AI Pro: What Does Prompt Engineering Entail and How Do You Start?

Explore the growing field of prompt engineering, a vital skill for AI enthusiasts. Learn how to craft optimized prompts for tools like ChatGPT and Gemini, and discover the career opportunities and skills needed to succeed in this fast-evolving indust

How Security Classification Guides Strengthen Data Protection in Modern Cybersecurity

A Security Classification Guide (SCG) defines data protection standards, ensuring sensitive information is handled securely across all levels. By outlining confidentiality, access controls, and declassification procedures, SCGs strengthen cybersecuri

Artificial Intelligence – A Growing Field of Study for Modern Learners

Artificial Intelligence is becoming a top study choice due to high job demand and future scope. This blog explains key subjects, career opportunities, and a simple AI study roadmap to help beginners start learning and build a strong career in the AI

Java in 2026: Why This ‘Old’ Language Is Still Your Golden Ticket to a Tech Career (And Where to Learn It!

Think Java is old news? Think again! 90% of Fortune 500 companies (yes, including Google, Amazon, and Netflix) run on Java (Oracle, 2025). From Android apps to banking systems, Java is the backbone of tech—and Sulekha IT Services is your fast track t

From Student to AI Pro: What Does Prompt Engineering Entail and How Do You Start?

Learn what prompt engineering is, why it matters, and how students and professionals can start mastering AI tools like ChatGPT, Gemini, and Copilot.

Cyber Security in 2025: The Golden Ticket to a Future-Proof Career

Cyber security jobs are growing 35% faster than any other tech field (U.S. Bureau of Labor Statistics, 2024)—and the average salary is $100,000+ per year! In a world where data breaches cost businesses $4.45 million on average (IBM, 2024), cyber secu

SAP SD in 2025: Your Ticket to a High-Flying IT Career

In the fast-paced world of IT and enterprise software, SAP SD (Sales and Distribution) is the secret sauce that keeps businesses running smoothly. Whether it’s managing customer orders, pricing, shipping, or billing, SAP SD is the backbone of sales o

SAP FICO in 2025: Salary, Jobs & How to Get Certified

AP FICO professionals earn $90,000–$130,000/year in the USA and Canada—and demand is skyrocketing! If you’re eyeing a future-proof IT career, SAP FICO (Financial Accounting & Controlling) is your golden ticket. But where do you start? Sulekha IT Serv

Train Like an AI Engineer: The Smartest Career Move You’ll Make This Year!

Why AI Engineering Is the Hottest Skillset Right Now From self-driving cars to chatbots that sound eerily human, Artificial Intelligence is no longer science fiction — it’s the backbone of modern tech. And guess what? Companies across the USA and Can

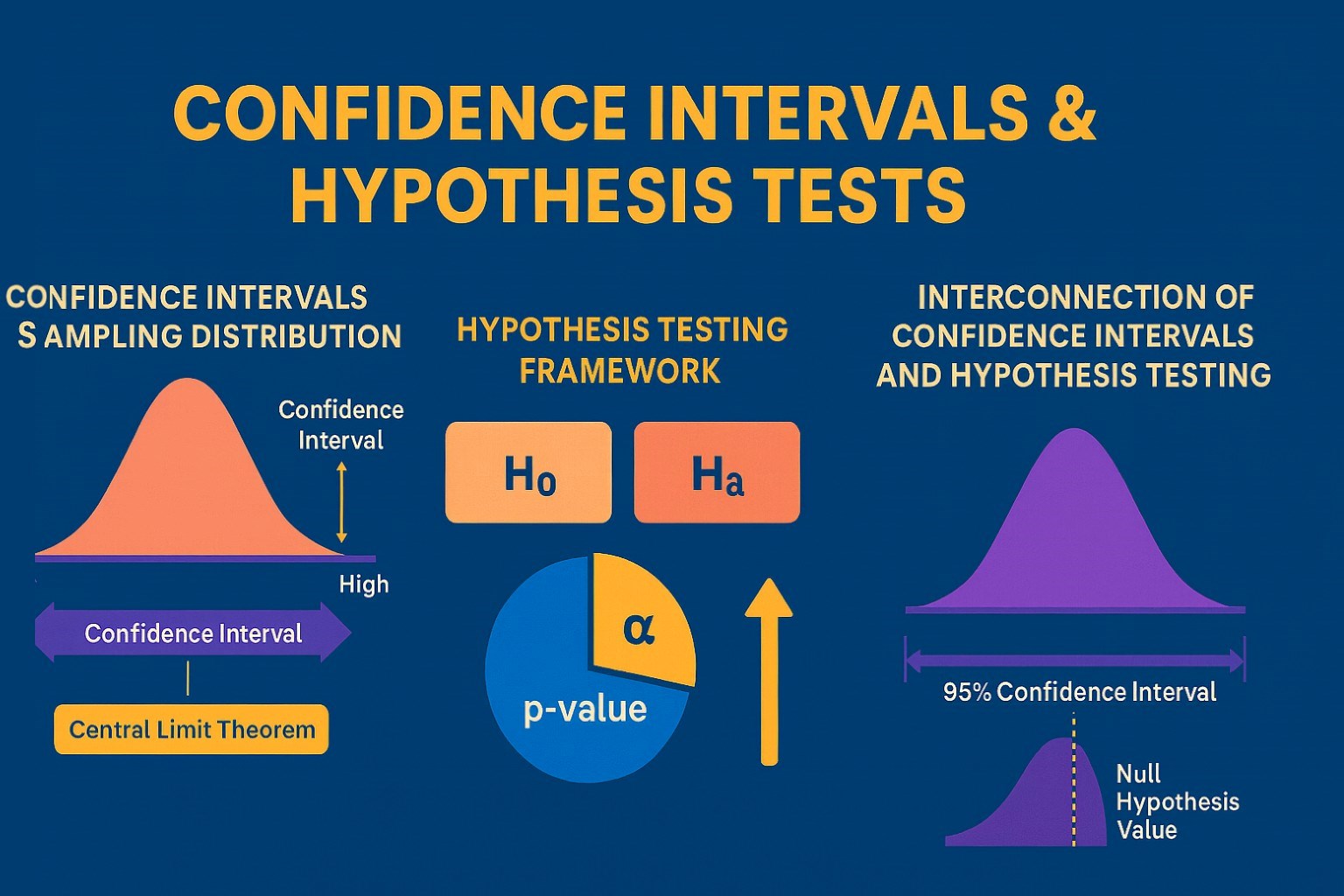

Confidence Intervals & Hypothesis Tests: The Data Science Path to Generalization

Learn how confidence intervals and hypothesis tests turn sample data into reliable population insights in data science. Understand CLT, p-values, and significance to generalize results, quantify uncertainty, and make evidence-based decisions.