Though they work in similar ways, big data projects need both Apache Spark and Hadoop!

In this era of big data technology, Hadoop and Apache Spark remain strong contenders despite being open source projects. Both are projects of the Apache Software Foundation and are intended for broadly similar purposes. You will notice plenty of differences as you learn Apache Spark and Hadoop, but the two are not mutually exclusive. Both are Big Data frameworks: they provide some of the most popular tools used to carry out various Big Data-related tasks.

For years, Apache Hadoop was the king of open-source Big Data frameworks, until Apache Spark was released with some notable advantages. The two Apache projects are not mutually exclusive, because they can work together effectively. Although Apache Spark is reported to run up to 100 times faster than Hadoop in certain circumstances, it does not provide its own distributed storage system.

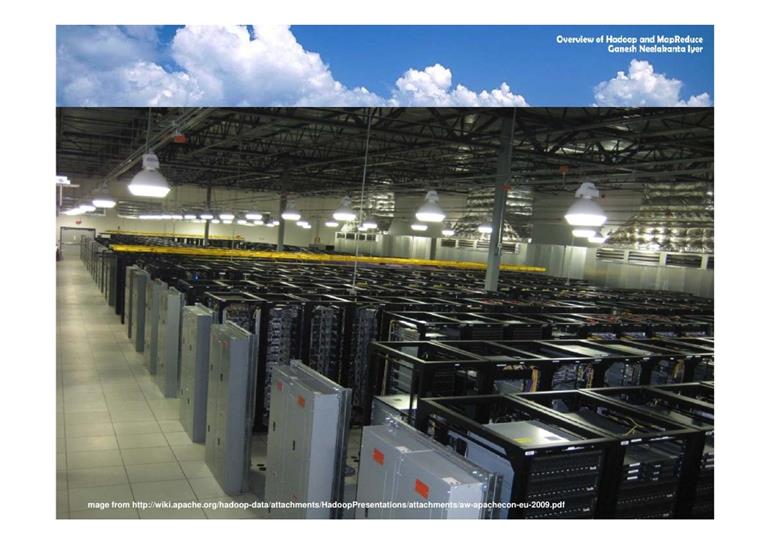

Distributed storage is fundamental to many of today’s Big Data projects, as it allows vast multi-petabyte datasets to be stored across an almost unlimited number of ordinary computer hard drives, rather than on hugely costly custom machinery that would hold everything on one device. These systems are scalable, meaning that more drives can be added to the network as the dataset grows in size.
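
To make the idea concrete, here is a minimal Python sketch of spreading one dataset across several machines. The function names, the node names, and the round-robin placement are assumptions made for illustration only; a real system such as HDFS uses its own placement policy and also keeps replicas of each block.

```python
def split_into_blocks(data, block_size):
    """Cut a byte string into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_blocks(blocks, nodes):
    """Assign block indexes to nodes in round-robin order (illustrative policy)."""
    placement = {node: [] for node in nodes}
    for index in range(len(blocks)):
        placement[nodes[index % len(nodes)]].append(index)
    return placement


data = bytes(range(10))                      # stand-in for a large dataset
blocks = split_into_blocks(data, block_size=4)

# With two nodes, one of them has to hold two blocks.
two_nodes = place_blocks(blocks, ["node-a", "node-b"])

# Adding a third node spreads the same blocks more thinly.
three_nodes = place_blocks(blocks, ["node-a", "node-b", "node-c"])
```

Scaling out in this toy model just means passing a longer list of nodes; no single machine ever needs to hold the whole dataset.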

Apache Spark does not include its own system for organizing files in a distributed way (the file system), so it requires one provided by a third party. For this reason, many Big Data projects involve installing Apache Spark on top of Hadoop, where Apache Spark’s advanced analytics applications can make use of data stored using the Hadoop Distributed File System (HDFS).

What really gives Apache Spark the edge over Hadoop is speed. Apache Spark handles most of its operations “in memory”, copying the data from distributed physical storage into far faster RAM. This reduces the amount of time-consuming reading and writing to and from slow mechanical hard drives that has to be done under Hadoop’s MapReduce system.
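
The difference can be sketched in plain Python. This is a toy model, not Spark or MapReduce code: `run_with_disk` and `run_in_memory` are hypothetical helpers, and the three arithmetic stages stand in for real processing steps. The first runner writes every intermediate result to a file and reads it back, while the second keeps the data in RAM between stages.

```python
import json
import os
import tempfile


def run_with_disk(data, stages):
    """Apply each stage, saving the intermediate result to disk and reloading it."""
    disk_writes = 0
    with tempfile.TemporaryDirectory() as tmp:
        for i, stage in enumerate(stages):
            data = [stage(x) for x in data]
            path = os.path.join(tmp, f"stage_{i}.json")
            with open(path, "w") as f:
                json.dump(data, f)           # persist the intermediate result
            disk_writes += 1
            with open(path) as f:
                data = json.load(f)          # the next stage reads it back
    return data, disk_writes


def run_in_memory(data, stages):
    """Apply each stage, handing the result straight to the next one in RAM."""
    for stage in stages:
        data = [stage(x) for x in data]
    return data, 0


stages = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3]
numbers = list(range(5))

disk_result, disk_writes = run_with_disk(numbers, stages)
mem_result, mem_writes = run_in_memory(numbers, stages)
```

Both runners produce the same answer; the disk-based one simply pays for one write and one read per stage, which is the overhead the in-memory approach avoids.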

MapReduce writes all of the data back to the physical storage medium after each operation. This was originally done to ensure that a full recovery could be made if something went wrong, as data held electronically in RAM is more volatile than data stored magnetically on disks. However, Apache Spark arranges data in what are known as Resilient Distributed Datasets (RDDs), which can be recovered following a failure.
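
One way to picture how a dataset held in memory can still be recovered is to have it remember the steps that produced it, so a lost result can be recomputed from the original input. The `ToyRDD` class below is a hypothetical, single-machine sketch of that idea and is not Spark's API; real RDDs track this lineage per partition across a cluster.

```python
class ToyRDD:
    """A toy dataset that records how it was derived from a durable source."""

    def __init__(self, source, transforms=()):
        self._source = source                 # original input, assumed durable
        self._transforms = tuple(transforms)  # ordered steps applied so far
        self._cache = None                    # in-memory result, may be lost

    def map(self, fn):
        """Return a new dataset whose recorded steps include one more function."""
        return ToyRDD(self._source, self._transforms + (fn,))

    def collect(self):
        """Return the result, recomputing it from the source if the cache is gone."""
        if self._cache is None:
            data = list(self._source)
            for fn in self._transforms:
                data = [fn(x) for x in data]
            self._cache = data
        return list(self._cache)

    def lose_cache(self):
        """Simulate a failure that wipes the in-memory copy."""
        self._cache = None


rdd = ToyRDD([1, 2, 3]).map(lambda x: x * 10).map(lambda x: x + 1)
before_failure = rdd.collect()

rdd.lose_cache()                  # the in-memory data disappears
after_failure = rdd.collect()     # rebuilt by replaying the recorded steps
```

Because the recipe is kept rather than a copy of every intermediate result, nothing needs to be written to disk after each step for recovery to be possible.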

Apache Spark’s functionality for handling advanced data processing jobs, such as real-time stream processing and machine learning, is way ahead of what is possible with Hadoop alone. This, along with the gain in speed provided by in-memory operations, is in my opinion the real reason for its growth in popularity. The increasing amount of Apache Spark activity in the open source community, compared with Hadoop activity, is a further sign that everyday business users are finding increasingly innovative uses for their stored data.

The open source principle is a great thing in many ways, and one of them is how it enables seemingly similar products to exist alongside each other. Vendors can sell both, or rather provide installation and support services for both, based on what their customers actually need in order to extract maximum value from their data.

Find a course provider to learn Hadoop Spark

Java training | J2EE training | J2EE Jboss training | Apache JMeter trainingTake the next step towards your professional goals in Hadoop Spark

Don't hesitate to talk with our course advisor right now

Receive a call

Contact NowMake a call

+1-732-338-7323Enroll for the next batch

Hadoop Spark Training from Experts

- Dec 10 2025

- Online

Big Data Hadoop Spark Training

- Dec 11 2025

- Online

Big Data Hadoop Spark Training

- Dec 12 2025

- Online

Related blogs on Hadoop Spark to learn more

Advanced Big Data Analytics using Apache Spark Ecosystem!

Apache Spark managed to provide several advantages over any other big data technologies such as Hadoop and MapReduce. It offers more functions and comes with optimized arbitrary operator graphs. There are many other advantages such as the following,

Benefits of using Apache Spark!

Apache Spark has become significant and familiar for it providing data engineers and data scientists, a powerful, unified engine which is fast (100 times faster than the Apache Hadoop that is for large-scale data processing) and easy to manage and us

New database solution supported by Apache Spark!

Yes, that’s right! Now Apache Spark is powering live SQL analytics in a newly unveiled database solution software called SnappyData.

Muscle-up the Apache Spark with these incredible tools!

It’s not just being faster, the Apache Spark revolutionized the world of Big Data with its incredible platform and tools. This powerful tool had impressed the world with this simpler and more convenient features. Spark isn't only one thing; it's a co

Latest blogs on technology to explore

From Student to AI Pro: What Does Prompt Engineering Entail and How Do You Start?

Explore the growing field of prompt engineering, a vital skill for AI enthusiasts. Learn how to craft optimized prompts for tools like ChatGPT and Gemini, and discover the career opportunities and skills needed to succeed in this fast-evolving indust

How Security Classification Guides Strengthen Data Protection in Modern Cybersecurity

A Security Classification Guide (SCG) defines data protection standards, ensuring sensitive information is handled securely across all levels. By outlining confidentiality, access controls, and declassification procedures, SCGs strengthen cybersecuri

Artificial Intelligence – A Growing Field of Study for Modern Learners

Artificial Intelligence is becoming a top study choice due to high job demand and future scope. This blog explains key subjects, career opportunities, and a simple AI study roadmap to help beginners start learning and build a strong career in the AI

Java in 2026: Why This ‘Old’ Language Is Still Your Golden Ticket to a Tech Career (And Where to Learn It!

Think Java is old news? Think again! 90% of Fortune 500 companies (yes, including Google, Amazon, and Netflix) run on Java (Oracle, 2025). From Android apps to banking systems, Java is the backbone of tech—and Sulekha IT Services is your fast track t

From Student to AI Pro: What Does Prompt Engineering Entail and How Do You Start?

Learn what prompt engineering is, why it matters, and how students and professionals can start mastering AI tools like ChatGPT, Gemini, and Copilot.

Cyber Security in 2025: The Golden Ticket to a Future-Proof Career

Cyber security jobs are growing 35% faster than any other tech field (U.S. Bureau of Labor Statistics, 2024)—and the average salary is $100,000+ per year! In a world where data breaches cost businesses $4.45 million on average (IBM, 2024), cyber secu

SAP SD in 2025: Your Ticket to a High-Flying IT Career

In the fast-paced world of IT and enterprise software, SAP SD (Sales and Distribution) is the secret sauce that keeps businesses running smoothly. Whether it’s managing customer orders, pricing, shipping, or billing, SAP SD is the backbone of sales o

SAP FICO in 2025: Salary, Jobs & How to Get Certified

AP FICO professionals earn $90,000–$130,000/year in the USA and Canada—and demand is skyrocketing! If you’re eyeing a future-proof IT career, SAP FICO (Financial Accounting & Controlling) is your golden ticket. But where do you start? Sulekha IT Serv

Train Like an AI Engineer: The Smartest Career Move You’ll Make This Year!

Why AI Engineering Is the Hottest Skillset Right Now From self-driving cars to chatbots that sound eerily human, Artificial Intelligence is no longer science fiction — it’s the backbone of modern tech. And guess what? Companies across the USA and Can

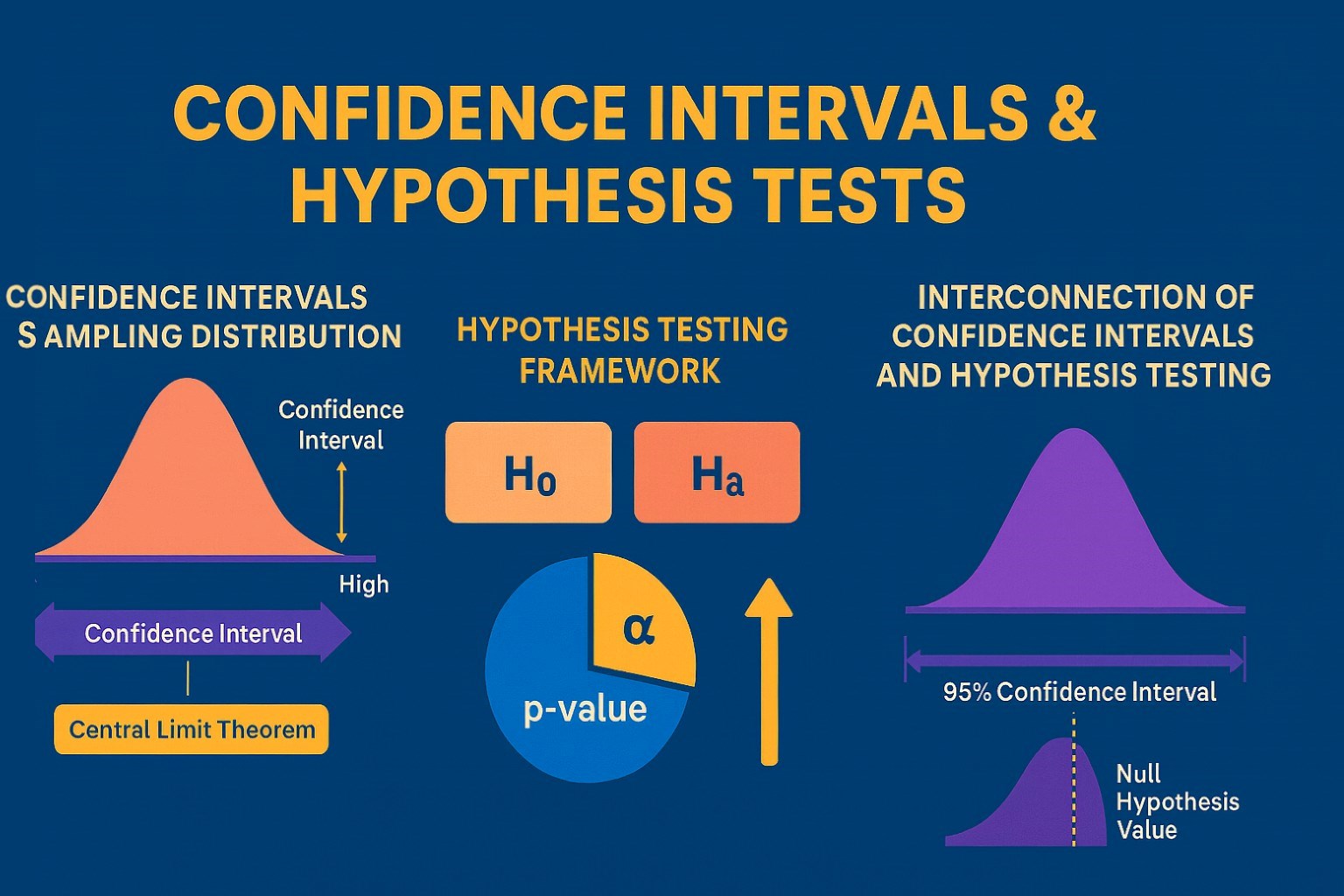

Confidence Intervals & Hypothesis Tests: The Data Science Path to Generalization

Learn how confidence intervals and hypothesis tests turn sample data into reliable population insights in data science. Understand CLT, p-values, and significance to generalize results, quantify uncertainty, and make evidence-based decisions.