4 Best Practices for a Hadoop Administrator

As one of the leading open-source frameworks for managing big data in a distributed environment, Apache Hadoop continues to see growing adoption. As a result, many database professionals aim to learn and administer the Hadoop platform to advance their careers.

#4 – Stay connected to Hadoop Community

Staying connected is essential. Although the framework is feature-rich, Hadoop still has many open bugs, so any administrator is likely to run into issues that need resolving. Because Hadoop is open source, it does not come with the instant vendor support that expensive commercial platforms provide. In such cases, it is wise to seek help from the Hadoop community. Staying active in the communities and forums devoted to Hadoop administration and development puts you in touch with people who can solve your problem. (Tip: keep track of at least 10 Hadoop communities and forums.)

#3 – Turn on the Trash function

A Hadoop administrator's work is often hectic, and mistakes are bound to happen. One of the most costly is accidental data deletion. Hadoop clusters commonly hold terabytes of data, and by default a deleted file is removed immediately, with no recycle bin to restore it from. This is where the Trash function comes in. It works much like the Recycle Bin in Windows: deleted files are kept for a configurable period before they are removed permanently. The function is turned off by default, so all you have to do is enable it.

To enable Trash, follow these simple steps.

Open core-site.xml in vi and add the following property. The value is the retention time in minutes (1440 minutes is 24 hours).

<property><name>fs.trash.interval</name><value>1440</value></property>
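As a minimal sketch of how the value relates to a retention period, the snippet below converts hours to minutes and renders the property shown above. The helper names `trash_interval_minutes` and `trash_property_xml` are hypothetical and exist only for this illustration; only `fs.trash.interval` comes from the configuration itself.

```python
import xml.etree.ElementTree as ET


def trash_interval_minutes(hours: float) -> int:
    """Convert a retention period in hours to whole minutes."""
    return int(round(hours * 60))


def trash_property_xml(hours: float) -> str:
    """Render the fs.trash.interval property for core-site.xml.

    Hypothetical helper: it only builds the XML fragment and does not
    touch any real configuration file.
    """
    prop = ET.Element("property")
    ET.SubElement(prop, "name").text = "fs.trash.interval"
    ET.SubElement(prop, "value").text = str(trash_interval_minutes(hours))
    return ET.tostring(prop, encoding="unicode")


if __name__ == "__main__":
    # 24 hours of retention corresponds to the 1440 used in the article.
    print(trash_property_xml(24))
```

A value of 0 leaves the feature disabled, which matches the default described above.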

Once Trash is enabled, a regular delete command moves files into the trash instead of removing them. To bypass the trash and delete the data permanently, add the "-skipTrash" option to the command:

hadoop fs -rm -skipTrash /xxxx
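The difference between a normal delete and a permanent one can be sketched as follows. This is an illustration only: the helper names are hypothetical, and the trash location assumes the usual HDFS layout in which trashed files land under `.Trash/Current` in the deleting user's home directory. The script only builds the command line and does not run it.

```python
import posixpath
from typing import List


def trash_path(user: str, deleted_path: str) -> str:
    """Expected location of a trashed file.

    Assumption: the default HDFS layout, /user/<user>/.Trash/Current/<original path>.
    """
    return posixpath.join("/user", user, ".Trash", "Current", deleted_path.lstrip("/"))


def rm_command(path: str, skip_trash: bool = False) -> List[str]:
    """Build the argument list for `hadoop fs -rm`, optionally bypassing the trash."""
    cmd = ["hadoop", "fs", "-rm"]
    if skip_trash:
        cmd.append("-skipTrash")  # permanent delete, nothing is kept in the trash
    cmd.append(path)
    return cmd


if __name__ == "__main__":
    # "alice" and the file path are made-up example values.
    print(" ".join(rm_command("/data/logs/app.log")))
    print("recoverable from:", trash_path("alice", "/data/logs/app.log"))
    print(" ".join(rm_command("/data/logs/app.log", skip_trash=True)))
```

Reserving `-skipTrash` for cases where space must be freed immediately keeps the safety net in place for everyday deletes.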

#2 – Use Hive or Pig

Native Hadoop MapReduce jobs are written in Java, but an administrator can also write them in Python, shell or Perl. Rather than hand-coding jobs in any of these languages, however, installing and using Apache Hive or Apache Pig is a wise choice that saves a lot of time and effort.
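To give a sense of the effort involved, here is a rough sketch of a word count written in the map and reduce style that a Python job would follow (non-Java jobs are typically run through Hadoop Streaming). It is a simplified in-process simulation, not a production job: a real streaming mapper and reducer would read from standard input and write tab-separated lines to standard output, and the sort step below stands in for Hadoop's shuffle phase. The function names and sample data are made up for this example.

```python
from itertools import groupby
from typing import Iterable, Iterator, Tuple


def mapper(lines: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Emit (word, 1) for every word in the input lines."""
    for line in lines:
        for word in line.split():
            yield word.lower(), 1


def reducer(pairs: Iterable[Tuple[str, int]]) -> Iterator[Tuple[str, int]]:
    """Sum the counts per word; the input must already be sorted by word."""
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        yield word, sum(count for _, count in group)


if __name__ == "__main__":
    sample = ["Hadoop stores data", "Hive queries data"]
    shuffled = sorted(mapper(sample))  # stands in for the shuffle/sort phase
    for word, total in reducer(shuffled):
        print(f"{word}\t{total}")
```

In Hive, the same aggregation is usually a single query with a GROUP BY clause, which is the kind of saving the paragraph above refers to.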

#1 – NameNode (NN) and DataNode (DN) memory

After installing a Hadoop cluster, it is very important to review the memory settings of the NameNode (NN) and DataNode (DN). The memory each daemon needs depends on the number of files stored and their size, so the administrator should adjust the NN and DN memory settings to fit the workload. Open the following file to edit the memory settings.

hadoop-env.sh (under conf/ in Hadoop 1.x, or etc/hadoop/ in Hadoop 2.x and later)

The following memory configuration is recommended by the experts.

- NN (NameNode): 15-25 GB
- JT (JobTracker): 2-4 GB
- DN (DataNode): 1-4 GB
- TT (TaskTracker): 1-2 GB
- Child VM: 1-2 GB
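As a hedged sketch of what such a setting could look like in hadoop-env.sh, the snippet below renders export lines that pass a maximum JVM heap (`-Xmx`) to the NameNode and DataNode. It assumes the `HADOOP_NAMENODE_OPTS` and `HADOOP_DATANODE_OPTS` variables used by Hadoop 1.x and 2.x (newer releases use different variable names), and the helper `heap_opts_line` is hypothetical. The ranges checked are the NN and DN ranges recommended above.

```python
# Recommended heap ranges in GB, taken from the NN and DN figures in the article.
RECOMMENDED_GB = {
    "HADOOP_NAMENODE_OPTS": (15, 25),
    "HADOOP_DATANODE_OPTS": (1, 4),
}


def heap_opts_line(variable: str, heap_gb: int) -> str:
    """Render one export line for hadoop-env.sh.

    Assumption: the daemon reads its JVM options from `variable`.
    Raises ValueError if the heap is outside the recommended range.
    """
    low, high = RECOMMENDED_GB[variable]
    if not low <= heap_gb <= high:
        raise ValueError(f"{heap_gb} GB is outside the {low}-{high} GB range for {variable}")
    # Keep any options that were already set by appending the existing value.
    return f'export {variable}="-Xmx{heap_gb}g ${{{variable}}}"'


if __name__ == "__main__":
    print(heap_opts_line("HADOOP_NAMENODE_OPTS", 20))
    print(heap_opts_line("HADOOP_DATANODE_OPTS", 2))
```

The daemons need to be restarted before a changed heap size takes effect.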

(Note: A typical cluster with a huge number of small files needs at least 20 GB of NN memory and 2 GB of DN memory.)
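To see why small files drive NameNode memory, a rough estimate can be sketched as below. It relies on a commonly cited rule of thumb of about 1 GB of NameNode heap per million blocks. That rule is an assumption brought in for illustration and does not come from this article, so treat the result as a starting point rather than a sizing guarantee. The function name and its parameters are hypothetical.

```python
import math


def estimate_namenode_heap_gb(
    num_files: int,
    blocks_per_file: float = 1.0,        # small files usually occupy one block each
    gb_per_million_blocks: float = 1.0,  # assumed rule of thumb, not from the article
    minimum_gb: int = 1,
) -> int:
    """Estimate NameNode heap in whole GB from the number of files stored."""
    blocks = num_files * blocks_per_file
    estimate = math.ceil(blocks / 1_000_000 * gb_per_million_blocks)
    return max(minimum_gb, estimate)


if __name__ == "__main__":
    # Twenty million small files, one block each.
    print(estimate_namenode_heap_gb(20_000_000))
```

Under these assumptions, twenty million single-block files already call for roughly 20 GB of heap, which is in line with the note above.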

Learning Hadoop is both interesting and promising for your career.

Find a course provider to learn Hadoop Administration

Java training | J2EE training | J2EE Jboss training | Apache JMeter trainingTake the next step towards your professional goals in Hadoop Administration

Don't hesitate to talk with our course advisor right now

Receive a call

Contact NowMake a call

+1-732-338-7323Enroll for the next batch

Hadoop Administration Certification Classes Online

- Dec 10 2025

- Online

Advance Hadoop Admin Training

- Dec 11 2025

- Online

Advance Hadoop Admin Training

- Dec 12 2025

- Online

Related blogs on Hadoop Administration to learn more

How to manage responsibilities as a Hadoop Administrator?

How to manage responsibilities as a Hadoop Administrator? Creating, editing and inspecting the Hadoop Cluster happens to be core ideologies of an Hadoop Administrator. However, cluster administration alone is not the sole responsibility of the Hadoo

Start-up activities and responsibilities of a Hadoop Admin

The world is witnessing the rapid generation of big data from various sectors and industries. And Hadoop, being the best big data management tool, it is adopted by more and more traditional enterprise IT solutions and also there is a significant incr

Now Hadoop Administrators can enjoy Google’s Dataproc!

Getting insights out of big data is typically neither quick nor easy, but Google is aiming to change all that with a new, managed service for Hadoop and Spark. Google recently declared that it would relase Cloud Dataproc which offers a Spark or Hadoo

What does Hadoop Administrators and Architects do?

Big data administrators and architects play a vital role in resolving those problems arising in big data ecosystem. Here, in this blog, let us examine how Big Data Hadoop architects and administrators handle the problems pertaining to big data.

Hadoop: A Groundbreaking Technology on its 10th Year of Success

Hadoop, the most promising and equally successful open source framework for unstructured analytics and data has completed 10 glorious years this January. There have been several milestones, major players, and some interesting events to mark the growt

Latest blogs on technology to explore

From Student to AI Pro: What Does Prompt Engineering Entail and How Do You Start?

Explore the growing field of prompt engineering, a vital skill for AI enthusiasts. Learn how to craft optimized prompts for tools like ChatGPT and Gemini, and discover the career opportunities and skills needed to succeed in this fast-evolving indust

How Security Classification Guides Strengthen Data Protection in Modern Cybersecurity

A Security Classification Guide (SCG) defines data protection standards, ensuring sensitive information is handled securely across all levels. By outlining confidentiality, access controls, and declassification procedures, SCGs strengthen cybersecuri

Artificial Intelligence – A Growing Field of Study for Modern Learners

Artificial Intelligence is becoming a top study choice due to high job demand and future scope. This blog explains key subjects, career opportunities, and a simple AI study roadmap to help beginners start learning and build a strong career in the AI

Java in 2026: Why This ‘Old’ Language Is Still Your Golden Ticket to a Tech Career (And Where to Learn It!

Think Java is old news? Think again! 90% of Fortune 500 companies (yes, including Google, Amazon, and Netflix) run on Java (Oracle, 2025). From Android apps to banking systems, Java is the backbone of tech—and Sulekha IT Services is your fast track t

From Student to AI Pro: What Does Prompt Engineering Entail and How Do You Start?

Learn what prompt engineering is, why it matters, and how students and professionals can start mastering AI tools like ChatGPT, Gemini, and Copilot.

Cyber Security in 2025: The Golden Ticket to a Future-Proof Career

Cyber security jobs are growing 35% faster than any other tech field (U.S. Bureau of Labor Statistics, 2024)—and the average salary is $100,000+ per year! In a world where data breaches cost businesses $4.45 million on average (IBM, 2024), cyber secu

SAP SD in 2025: Your Ticket to a High-Flying IT Career

In the fast-paced world of IT and enterprise software, SAP SD (Sales and Distribution) is the secret sauce that keeps businesses running smoothly. Whether it’s managing customer orders, pricing, shipping, or billing, SAP SD is the backbone of sales o

SAP FICO in 2025: Salary, Jobs & How to Get Certified

AP FICO professionals earn $90,000–$130,000/year in the USA and Canada—and demand is skyrocketing! If you’re eyeing a future-proof IT career, SAP FICO (Financial Accounting & Controlling) is your golden ticket. But where do you start? Sulekha IT Serv

Train Like an AI Engineer: The Smartest Career Move You’ll Make This Year!

Why AI Engineering Is the Hottest Skillset Right Now From self-driving cars to chatbots that sound eerily human, Artificial Intelligence is no longer science fiction — it’s the backbone of modern tech. And guess what? Companies across the USA and Can

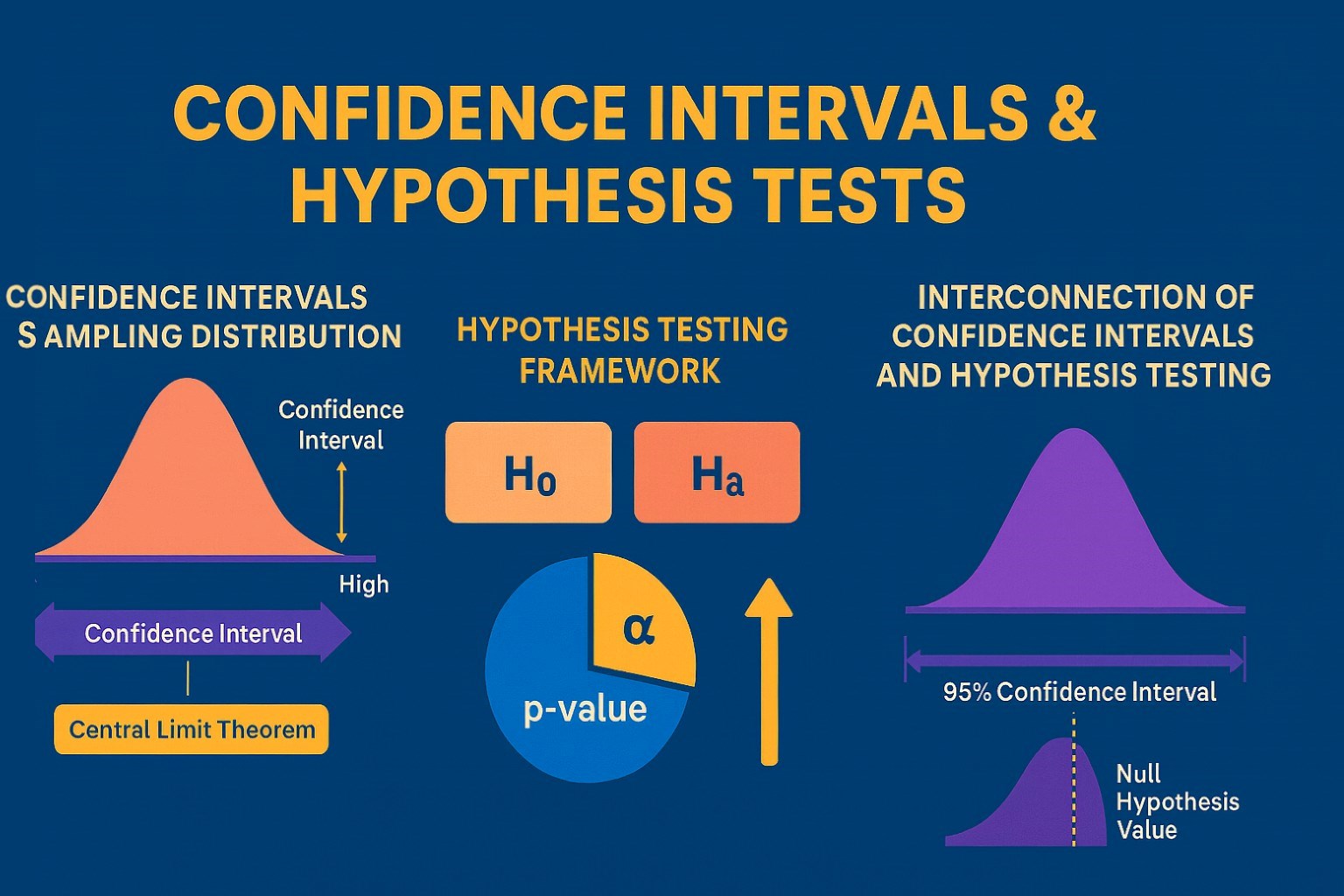

Confidence Intervals & Hypothesis Tests: The Data Science Path to Generalization

Learn how confidence intervals and hypothesis tests turn sample data into reliable population insights in data science. Understand CLT, p-values, and significance to generalize results, quantify uncertainty, and make evidence-based decisions.